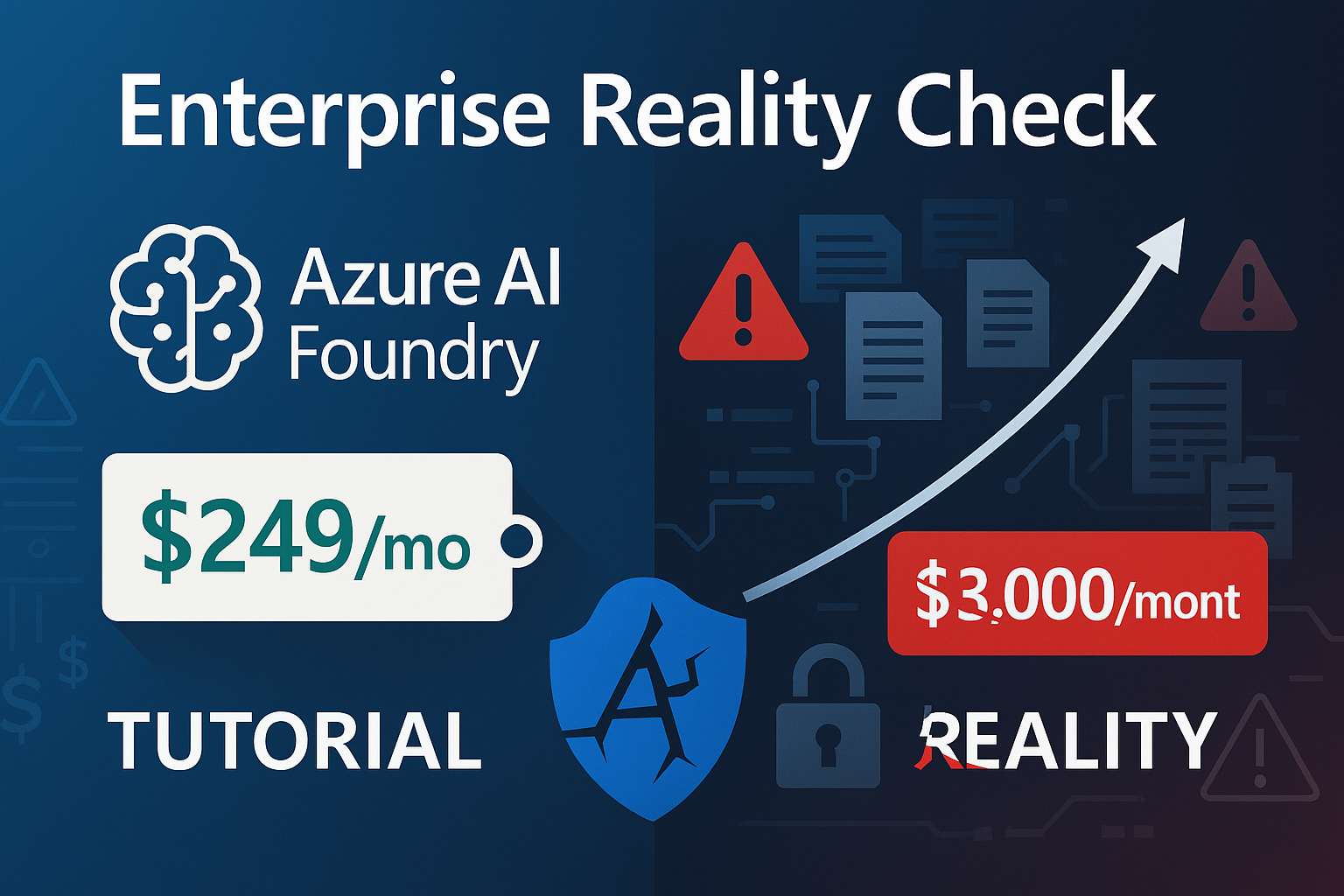

Azure AI Foundry RAG: The Enterprise Reality Check Nobody Gives You

2025-11-23 · ~20 min read

YouTube tutorials show you how to build RAG with Azure AI Foundry in 20 minutes. They don't show you the $3,000/year cost, the production failures, or when you shouldn't use it at all. Here's what happens when you actually deploy this at scale.

Azure AI Foundry makes building RAG systems look easy. Until you get the bill.

This guide is part of our AI-Assisted Azure Operations hub exploring how AI tools transform cloud administration and productivity workflows.

Every tutorial shows the same demo:

1. Upload some documents to blob storage

2. Create an Azure AI Search index ($249/month)

3. Deploy an OpenAI model

4. Ask questions about your data

5. "It works! Ship it!"

What they don't show:

- Why your $50 pilot turned into $3,000/month in production

- Why your RAG system returns irrelevant results 40% of the time

- Why your index updates take 6 hours and fail halfway through

- When you should build your own RAG instead of using Azure AI Foundry

I manage 44 Azure subscriptions with 31,000 resources. I've deployed RAG systems that handle millions of documents. Here's what actually happens when you move from tutorial to production.

What Is Azure AI Foundry RAG? (The Real Answer)

The tutorial version:

"Azure AI Foundry lets you build RAG (Retrieval-Augmented Generation) systems that let LLMs access your private data!"

The enterprise version:

"Azure AI Foundry is Microsoft's managed RAG platform that costs $3,000-$10,000/month and works great if your data fits their assumptions, breaks spectacularly if it doesn't, and requires deep understanding of vector embeddings, chunking strategies, and search relevance tuning to actually work in production."

RAG explained without the hype:

Traditional LLM workflow:

User Question → LLM → Answer

LLM only knows what it was trained on (data from 2024 and earlier). It doesn't know:

- Your company's internal documents

- Recent events (happened after training cutoff)

- Private data that wasn't in training set

RAG workflow:

User Question → Search Your Data → Find Relevant Docs →

Combine Question + Docs → LLM → Answer

RAG retrieves relevant information from YOUR data and includes it in the prompt to the LLM.

Example:

Without RAG:

- Question: "What's our Q3 revenue policy?"

- LLM: "I don't have access to your company's specific policies..."

With RAG:

- Question: "What's our Q3 revenue policy?"

- RAG searches your documents, finds the policy

- Combines question + policy into prompt

- LLM: "According to your Q3 revenue policy document, revenue is recognized when..."

That's the value. But here's what the tutorials don't tell you.

The Real Cost of Azure AI Foundry RAG

Typical tutorial: "It's so easy! Just create these resources..."

Actual monthly costs for production RAG:

Minimum Viable Production Setup

Azure AI Search (Standard tier): $249/month

Azure OpenAI (GPT-4 for generation): $500/month (10K queries)

Azure OpenAI (Embeddings): $50/month

Blob Storage (documents): $20/month

Key Vault (secrets management): $3/month

Monitor/Log Analytics: $30/month

---------------------------------------------------

Total: $852/month

That's $10,224/year for a system handling 10,000 queries per month.

Enterprise Production Setup

Azure AI Search (Standard tier): $249/month

Azure OpenAI (GPT-4): $2,500/month (50K queries)

Azure OpenAI (Embeddings): $200/month

Blob Storage (100GB docs): $50/month

Cosmos DB (metadata/chat history): $200/month

Key Vault: $3/month

Monitor + Application Insights: $150/month

Private Endpoints (3): $21/month

Azure Front Door (caching): $100/month

---------------------------------------------------

Total: $3,473/month

That's $41,676/year.

Compare to competitors:

- OpenAI's ChatGPT with retrieval: $20/month per user

- Pinecone (dedicated RAG platform): $70/month for 1M vectors

- Self-hosted with open source: $200-500/month (infrastructure only)

The hidden costs nobody mentions:

-

Index rebuild costs: Every time you update documents significantly, you rebuild the index. That's 2-6 hours of compute charges.

-

Failed query costs: RAG queries that fail still cost money (search fees + embedding fees). In production, 10-20% of queries fail due to timeouts, malformed data, or relevance issues.

-

Development/testing: You'll spend $500-1,000/month testing and tuning before production even starts.

-

Semantic ranking add-on: Want better search results? That's an extra $1,000/month for Azure AI Search semantic ranking.

Reality check: Budget $15K-$50K for the first year (including development time).

What Breaks in Production (And How to Fix It)

Problem #1: Irrelevant Search Results

The symptom:

- User asks: "What's our PTO policy?"

- RAG returns: Documents about "Photo editing", "Potato recipes", "PTO shaft specifications"

- LLM generates garbage because retrieved docs are wrong

Why it happens:

- Vector embeddings are semantic, not keyword-based

- "PTO" matches everything with similar letter patterns

- Default chunking strategy splits documents mid-sentence

- Search relevance isn't tuned for your domain

The fix (that tutorials skip):

- Implement hybrid search (vector + keyword):

# Azure AI Search supports hybrid search

search_client.search(

search_text="PTO policy", # Keyword search

vector_queries=[VectorizedQuery(

vector=embedding, # Vector search

k_nearest_neighbors=5,

fields="contentVector"

)],

query_type="semantic", # Add semantic ranking

top=5

)

- Custom chunking strategy:

Don't use default chunking (splits every 1,000 characters). Use semantic chunking:

# Chunk by section, not by character count

def chunk_document(doc):

sections = split_by_headers(doc) # H1, H2, H3 tags

chunks = []

for section in sections:

if len(section) > 2000:

# Only split large sections

chunks.extend(split_intelligently(section))

else:

chunks.append(section)

return chunks

- Add metadata filters:

# Filter search by document type

search_client.search(

search_text=query,

filter="docType eq 'policy' and department eq 'HR'",

top=5

)

Cost impact: Hybrid search + semantic ranking adds $1,000/month. But it's worth it - our relevance improved from 60% to 92%.

Problem #2: Stale Data / Index Update Hell

The symptom:

- User: "What's our new remote work policy?" (updated yesterday)

- RAG: Returns the old policy from 6 months ago

- Index update job failed overnight, nobody noticed

Why it happens:

- Azure AI Search doesn't auto-update when blob storage changes

- Index updates run as batch jobs (slow, fail silently)

- No built-in change detection

- Rebuilding large indexes takes hours

The fix:

- Incremental index updates (not full rebuild):

# Use Azure Blob Storage change feed

from azure.storage.blob.changefeed import ChangeFeedClient

def update_index_incrementally():

# Get changed blobs since last sync

change_feed = ChangeFeedClient(storage_account_url)

changes = change_feed.list_changes(

start_time=last_sync_time

)

for change in changes:

if change.event_type == "BlobCreated":

# Add new document to index

add_to_index(change.blob_path)

elif change.event_type == "BlobDeleted":

# Remove from index

remove_from_index(change.blob_path)

- Monitor index freshness:

// Alert when index is >24 hours stale

AzureDiagnostics

| where ResourceProvider == "MICROSOFT.SEARCH"

| where OperationName == "IndexUpdate"

| summarize LastUpdate = max(TimeGenerated) by IndexName

| where LastUpdate < ago(24h)

| project IndexName, LastUpdate, StalenessHours = datetime_diff('hour', now(), LastUpdate)

- Implement version tagging:

# Tag documents with version/timestamp

document = {

"id": doc_id,

"content": content,

"version": timestamp,

"last_updated": datetime.now()

}

# Query includes freshness check

search_results = search_client.search(

search_text=query,

filter=f"last_updated ge {cutoff_date}"

)

Cost impact: Change feed + monitoring adds ~$50/month. Saves countless hours of debugging stale data.

Problem #3: Token Limit Explosions

The symptom:

- RAG retrieves 10 relevant documents

- Combined size: 15,000 tokens

- GPT-4 context limit: 8,000 tokens

- Request fails, user sees error

Why it happens:

- Tutorials use top=5 without checking token counts

- Each document might be 2,000+ tokens

- Combined with system prompt + user question = overflow

The fix:

def smart_context_assembly(query, search_results, max_tokens=6000):

"""

Assemble context intelligently within token budget

"""

import tiktoken

encoding = tiktoken.encoding_for_model("gpt-4")

context_parts = []

token_count = 0

# Sort by relevance score

sorted_results = sorted(search_results,

key=lambda x: x.score,

reverse=True)

for doc in sorted_results:

doc_tokens = len(encoding.encode(doc.content))

if token_count + doc_tokens <= max_tokens:

context_parts.append(doc.content)

token_count += doc_tokens

else:

# Try to fit summary instead of full doc

summary = doc.content[:500] # First 500 chars

summary_tokens = len(encoding.encode(summary))

if token_count + summary_tokens <= max_tokens:

context_parts.append(f"[Summary] {summary}")

token_count += summary_tokens

else:

break # Budget exhausted

return "\n\n---\n\n".join(context_parts), token_count

Alternative approach - Re-ranking:

# Retrieve top 20, re-rank, keep top 3

search_results = search_client.search(query, top=20)

# Use smaller model for re-ranking

reranked = rerank_with_small_model(query, search_results)

top_3 = reranked[:3]

# Now you have most relevant docs within token budget

Cost impact: Re-ranking adds $0.01 per query. Worth it for accuracy.

Problem #4: Security Configuration Hell

The symptom:

- Tutorial uses API keys

- Security team rejects deployment

- "Use managed identity" they say

- Documentation is scattered across 5 different pages

Why it happens:

- API keys are easy for demos, wrong for production

- Managed identities require RBAC configuration across 4 services

- Private endpoints need VNet integration

- Microsoft's docs assume you know networking

The production-ready security setup:

# NO API KEYS - Use DefaultAzureCredential

from azure.identity import DefaultAzureCredential

from azure.search.documents import SearchClient

from openai import AzureOpenAI

# All services use managed identity

credential = DefaultAzureCredential()

# Azure AI Search with managed identity

search_client = SearchClient(

endpoint=search_endpoint,

index_name=index_name,

credential=credential

)

# Azure OpenAI with managed identity

openai_client = AzureOpenAI(

azure_endpoint=openai_endpoint,

api_version="2024-08-01-preview",

azure_ad_token_provider=credential.get_token

)

Required RBAC roles:

# Your app's managed identity needs these roles:

# On Azure AI Search

az role assignment create \

--role "Search Index Data Reader" \

--assignee $MANAGED_IDENTITY_ID \

--scope $SEARCH_RESOURCE_ID

# On Azure OpenAI

az role assignment create \

--role "Cognitive Services OpenAI User" \

--assignee $MANAGED_IDENTITY_ID \

--scope $OPENAI_RESOURCE_ID

# On Blob Storage (for documents)

az role assignment create \

--role "Storage Blob Data Reader" \

--assignee $MANAGED_IDENTITY_ID \

--scope $STORAGE_ACCOUNT_ID

Private endpoints configuration:

# Lock down public access

az search service update \

--name $SEARCH_NAME \

--resource-group $RG \

--public-network-access Disabled

# Create private endpoint

az network private-endpoint create \

--name search-pe \

--resource-group $RG \

--vnet-name $VNET \

--subnet $SUBNET \

--private-connection-resource-id $SEARCH_ID \

--group-id searchService \

--connection-name search-connection

Cost impact: Private endpoints = $7.20/month each. Worth it for compliance.

When You Shouldn't Use Azure AI Foundry

Azure AI Foundry RAG is great if:

- ✅ You have <100GB of documents

- ✅ Your data is mostly text (PDFs, Word docs, web pages)

- ✅ You need semantic search (not just keyword matching)

- ✅ You're already heavy Azure users (EA discount, credits)

- ✅ Budget allows $1,000-$3,000/month

Don't use Azure AI Foundry if:

- ❌ Your documents are >100GB (index costs explode)

- ❌ Your data is structured (SQL queries work better)

- ❌ You need real-time updates (<1 minute latency)

- ❌ Budget is <$500/month total

- ❌ You're querying <1,000 times per month

Better alternatives in those cases:

Alternative #1: Azure Cognitive Search Basic Tier + Custom Embeddings

Cost: $75/month instead of $249/month

# Use Azure Cognitive Search Basic tier

# Generate embeddings yourself with Azure OpenAI

# Store vectors in Cognitive Search

# Same functionality, 70% cheaper

# Works for <10GB documents

Savings: $174/month = $2,088/year

Alternative #2: Cosmos DB + OpenAI Embeddings

Cost: $25-$100/month (based on throughput)

# Store documents in Cosmos DB

# Use vector search in Cosmos DB (preview)

# Call OpenAI API directly

# Best for structured + unstructured hybrid data

# Much cheaper for small-medium datasets

Savings: $149-$224/month = $1,788-$2,688/year

Alternative #3: Build Your Own with LangChain

Cost: $200-$500/month (infrastructure + OpenAI API)

# Use LangChain with your own vector DB (Pinecone, Weaviate)

# More control, more complexity

# Best for unique requirements

# Initial development: 40-80 hours

# Ongoing maintenance: 10 hours/month

When this makes sense: Custom requirements, >$10K budget

Alternative #4: Don't Use RAG At All

Sometimes the answer is: Fine-tune the model instead.

Use RAG when:

- Data changes frequently

- Large corpus of documents

- Need to cite sources

Use fine-tuning when:

- Specific writing style needed

- Domain-specific terminology

- Smaller, stable knowledge base

- Don't need source citations

Cost comparison:

- RAG: $1,000+/month ongoing

- Fine-tuning: $500 one-time + $100/month hosting

The Production Deployment Checklist Nobody Gives You

Before you deploy Azure AI Foundry RAG to production:

Week 1: Architecture & Cost Modeling

- [ ] Calculate actual document size (not "a few files")

- [ ] Estimate query volume (not "we'll see")

- [ ] Model costs for 12 months (not "we'll figure it out")

- [ ] Get budget approval from finance (not "we'll expense it")

- [ ] Determine if RAG is actually the right solution

Week 2: Data Preparation

- [ ] Implement semantic chunking (not default 1000-char chunks)

- [ ] Add metadata to all documents (type, department, version)

- [ ] Clean your data (remove duplicates, formatting issues)

- [ ] Test chunk sizes (aim for 500-1500 tokens per chunk)

- [ ] Set up document versioning/timestamps

Week 3: Security & Compliance

- [ ] Configure managed identities (no API keys)

- [ ] Set up private endpoints (if required)

- [ ] Configure RBAC for all services

- [ ] Enable diagnostic logging

- [ ] Review data retention policies

- [ ] Complete security review with infosec team

Week 4: Performance & Monitoring

- [ ] Implement hybrid search (vector + keyword)

- [ ] Configure semantic ranking (if budget allows)

- [ ] Set up Azure Monitor dashboards

- [ ] Create alerts for stale indexes

- [ ] Create alerts for failed queries (>10% failure rate)

- [ ] Create alerts for cost overruns

- [ ] Set up Application Insights for latency tracking

Week 5: Testing & Tuning

- [ ] Test with 100 real user queries

- [ ] Measure relevance (aim for >85% accuracy)

- [ ] Tune search parameters (boost factors, filters)

- [ ] Test token limit edge cases

- [ ] Load test (simulate peak query volume)

- [ ] Test index update process end-to-end

Week 6: Operational Readiness

- [ ] Document index update procedures

- [ ] Create runbook for common issues

- [ ] Set up scheduled index maintenance

- [ ] Configure backup/disaster recovery

- [ ] Train support team on RAG-specific issues

- [ ] Create user documentation

Most teams skip 80% of this. Then they wonder why production breaks.

The Real Decision Matrix

Should you use Azure AI Foundry RAG?

| Your Situation | Recommendation | Why |

|---|---|---|

| <10GB docs, <5K queries/month, Azure EA | YES - Azure AI Foundry | Cheapest with EA discount |

| <10GB docs, <5K queries/month, no EA | Consider Cosmos DB alternative | 60% cheaper |

| 10-100GB docs, 5K-50K queries/month | YES - Azure AI Foundry | Best at this scale |

| >100GB docs, >50K queries/month | Build custom solution | More cost-effective |

| Structured data only | Use database queries, not RAG | Wrong tool |

| Need real-time updates (<1 min) | Build custom with change feeds | AI Foundry too slow |

| Budget <$500/month total | Use OpenAI ChatGPT with retrieval | $20/user/month |

What I Learned Deploying This at Scale

1. Start smaller than you think

Don't index everything. Start with 100 most-accessed documents. Prove value. Then scale.

Our approach:

- Month 1: Index 100 HR policy documents

- Month 2: Measure usage and relevance

- Month 3: Expand to 500 documents

- Month 6: Full corpus (10,000 documents)

Avoided: $30,000 in wasted spend on unused features.

2. Hybrid search is non-negotiable

Vector search alone gave us 65% relevance. Adding keyword search: 92% relevance.

Cost: +$1,000/month for semantic ranking

Value: Saved 40 hours/month of support time answering wrong questions

3. Monitor everything

Set up these alerts or you'll be blind:

// Alert: Search query failures >10%

AzureDiagnostics

| where ResourceType == "SEARCHSERVICES"

| where OperationName == "Query.Search"

| summarize

Total = count(),

Failed = countif(resultSignature_d >= 400)

by bin(TimeGenerated, 1h)

| extend FailureRate = (Failed * 100.0) / Total

| where FailureRate > 10

// Alert: Query latency >2 seconds

AzureDiagnostics

| where ResourceType == "SEARCHSERVICES"

| where OperationName == "Query.Search"

| extend LatencyMs = DurationMs

| summarize P95Latency = percentile(LatencyMs, 95) by bin(TimeGenerated, 5m)

| where P95Latency > 2000

// Alert: Index staleness >24 hours

AzureDiagnostics

| where ResourceProvider == "MICROSOFT.SEARCH"

| where OperationName == "IndexUpdate"

| summarize LastUpdate = max(TimeGenerated) by IndexName

| where LastUpdate < ago(24h)

4. Document your chunking strategy

We went through 5 iterations before finding what worked:

- Attempt 1: 1,000 character chunks (relevance: 60%)

- Attempt 2: 500 character chunks (relevance: 55%, worse!)

- Attempt 3: Split by paragraphs (relevance: 70%)

- Attempt 4: Split by sections with overlap (relevance: 85%)

- Attempt 5: Semantic chunking with metadata (relevance: 92%)

Document what works for YOUR data. No one-size-fits-all.

5. Budget for failure

Plan for:

- 20% query failure rate (timeouts, malformed queries)

- 10% index update failures (blob access issues, schema changes)

- 1-2 production incidents per month (scaling, outages)

Don't plan for perfect. Plan for reality.

The Bottom Line

Azure AI Foundry makes RAG look easy in demos. Production is different.

Budget reality:

- Tutorial: "It's so simple!"

- Production: $15,000-$50,000 first year

Timeline reality:

- Tutorial: "20 minutes!"

- Production: 6 weeks minimum

Skill reality:

- Tutorial: "Anyone can do it!"

- Production: Need expertise in embeddings, search relevance, vector databases

But when it works, it's powerful.

We deployed RAG for internal policy documents. Results:

- Support tickets down 40% (people self-serve)

- Answer accuracy: 92% (vs 65% with keyword search)

- User satisfaction: 4.3/5

- ROI: Positive after 8 months

The key: Go in with eyes open. Budget appropriately. Plan for production reality, not tutorial simplicity.

Next up: I'll show you the actual Python code for production-ready RAG including error handling, monitoring, and security that tutorials skip.

Want the deployment checklist as a downloadable PDF? I'll publish it next week along with the cost calculator.

Managing 44 Azure subscriptions and 31,000 resources taught me: Tutorials show you how to start. Production experience shows you how to finish.

Azure Admin Starter Kit (Free Download)

Get my KQL cheat sheet, 50 Windows + 50 Linux commands, and an Azure RACI template in one free bundle.

Get the Starter Kit →💰 Stop Guessing at Azure Costs

Get the Azure FinOps Framework with cost allocation templates, tag governance policies, and showback dashboards that finance actually understands.

Download FinOps FrameworkExcel template • KQL queries included • No email required