The Azure Arc Multi-vCenter Implementation Guide That Actually Works

2025-11-24 · ~51 min read

End-to-end implementation guide for connecting VMware vCenter to Azure Arc with governance in mind: tags, policy, RBAC, and reporting from day one.

This guide is part of our Azure Migration hub covering assessment, planning, execution, and post-migration optimization.

My boss asked me and my colleague to train the team on Azure Arc this morning. We're the only two people in the organization who understand Azure at scale. We just connected 1,200+ servers to Arc under pressure from Microsoft with no governance strategy.

Now we're supposed to teach others how to use it.

We're still laughing about it.

Here's why this is every enterprise Azure Arc deployment, and more importantly, here's the complete implementation guide that doesn't exist anywhere else.

The Reality Check: How Arc Deployments Actually Happen

What Should Happen:

1. Plan subscription and resource group strategy

2. Define tagging taxonomy with stakeholder input

3. Map cost centers and application owners

4. Design migration wave structure

5. Deploy Arc with governance from day one

What Actually Happens:

1. Microsoft sales calls every week about ESU deadlines

2. Leadership says "just get the servers connected, we'll figure out governance later"

3. You deploy everything to one subscription, one resource group, zero tags

4. Three months later: "Which department pays for these Arc licenses?"

5. You have no answer because there's no metadata

6. Now you're supposed to train people on a system you haven't properly architected

Figure 1: The "rushed Arc deployment" disaster - Everything crammed into one resource group with zero organization or tags

This pattern repeats across every enterprise I've talked to. Tactical pressure destroys strategic planning every single time.

The problem isn't the technology. Azure Arc works. The problem is nobody documents how to actually implement Arc at enterprise scale with proper governance.

This guide fixes that.

What is Azure Arc for VMware vCenter?

Quick Answer: Azure Arc for VMware vCenter connects your on-premises VMware infrastructure to Azure, enabling cloud-based management, security, and governance for VMs that stay in your datacenter.

How Azure Arc Works

Azure Arc uses a two-phase deployment model that most documentation glosses over:

Phase 1: Deploy Arc Resource Bridge

- A Kubernetes-based appliance VM deployed INTO your vCenter environment

- Connects your vCenter infrastructure to Azure cloud

- Makes VM inventory visible in Azure Portal

- Requirements: vCenter 7 or 8, 3 static IP addresses, 8GB RAM minimum

Phase 2: Install Arc Connected Machine Agents

- Individual agents installed on each VM you want to manage

- Enables guest OS management, ESU licensing, and Azure services

- Must be deployed WITH tags for proper cost allocation

What You Can Do With Arc

✅ ESU Licensing: Extend support for Windows Server 2012/R2 without migrating to Azure

✅ Unified Inventory: See all VMs from multiple vCenters in one Azure Portal view

✅ Azure Monitor: Collect logs and metrics from on-premises VMs

✅ Azure Policy: Apply governance and compliance policies to hybrid infrastructure

✅ Migration Planning: Use Azure Migrate to assess workloads before moving to cloud

✅ Cost Allocation: Tag VMs by department, application, or cost center for chargeback

Common Use Cases

Enterprise Scenarios:

- Managing 3+ vCenter instances (Production, Dev/Test, DR)

- Merger situations with acquired company vCenters

- Extended Security Updates for legacy Windows servers

- Multi-cloud inventory (Azure + VMware + AWS + GCP)

Deployment Timeline:

- POC (single vCenter, <50 VMs): 2-3 hours

- Enterprise (3 vCenters, 1,200+ VMs): 8-10 weeks including governance planning

Critical Success Factor: Tag your VMs DURING deployment, not after. Retroactive tagging requires metadata you don't have (cost centers, application owners, migration priorities).

Now let's talk about why most Arc deployments fail...

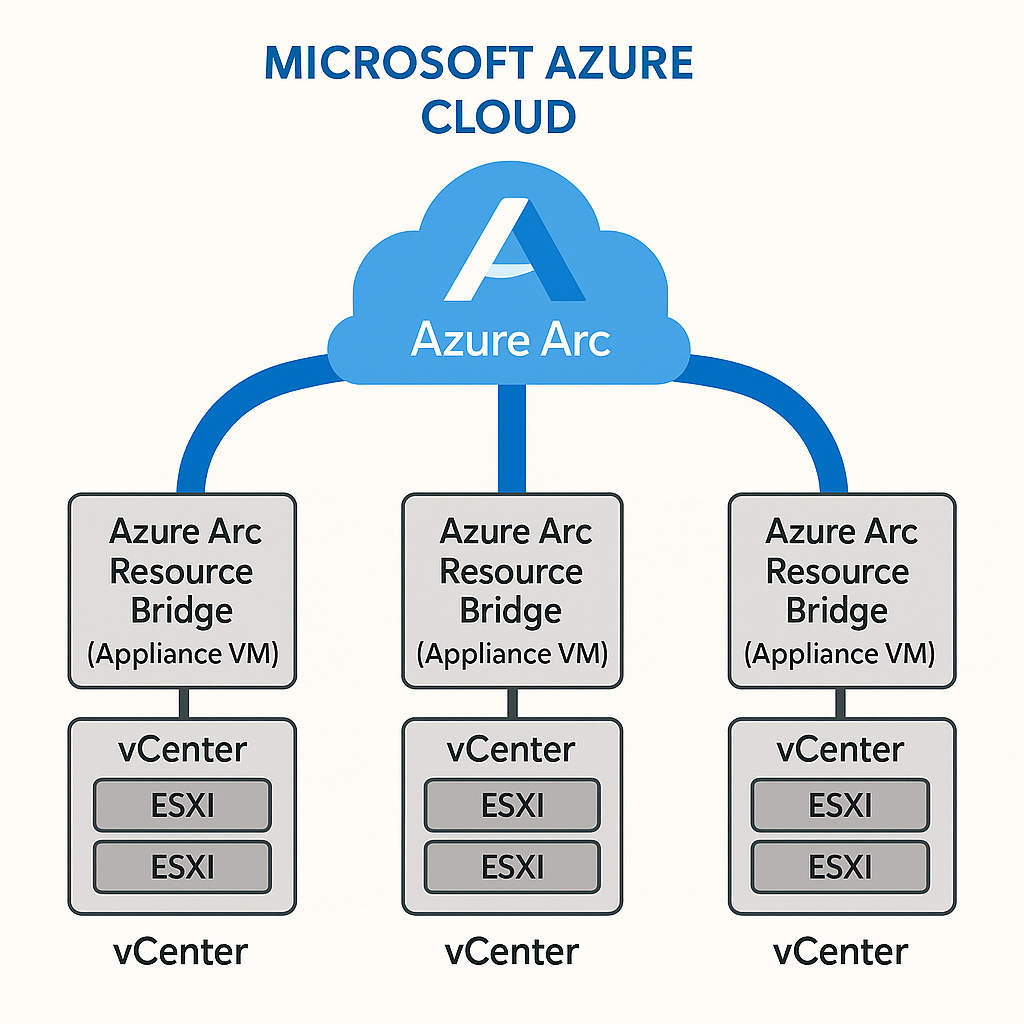

The Multi-vCenter Arc Architecture (The Goal)

Before we talk about what goes wrong, here's what proper multi-vCenter Arc architecture looks like:

The Goal: Each vCenter gets its own Arc Resource Bridge connecting to Azure Arc for unified management

This is the target architecture:

- Three vCenter environments (Production, Dev/Test, DR)

- Each vCenter has a dedicated Arc Resource Bridge (Kubernetes appliance VM)

- All bridges connect to Azure Arc cloud for unified management

- Clean separation allows independent lifecycle management

- Proper organization in Azure with resource groups and tags

Now let's look at what actually happens in most enterprises...

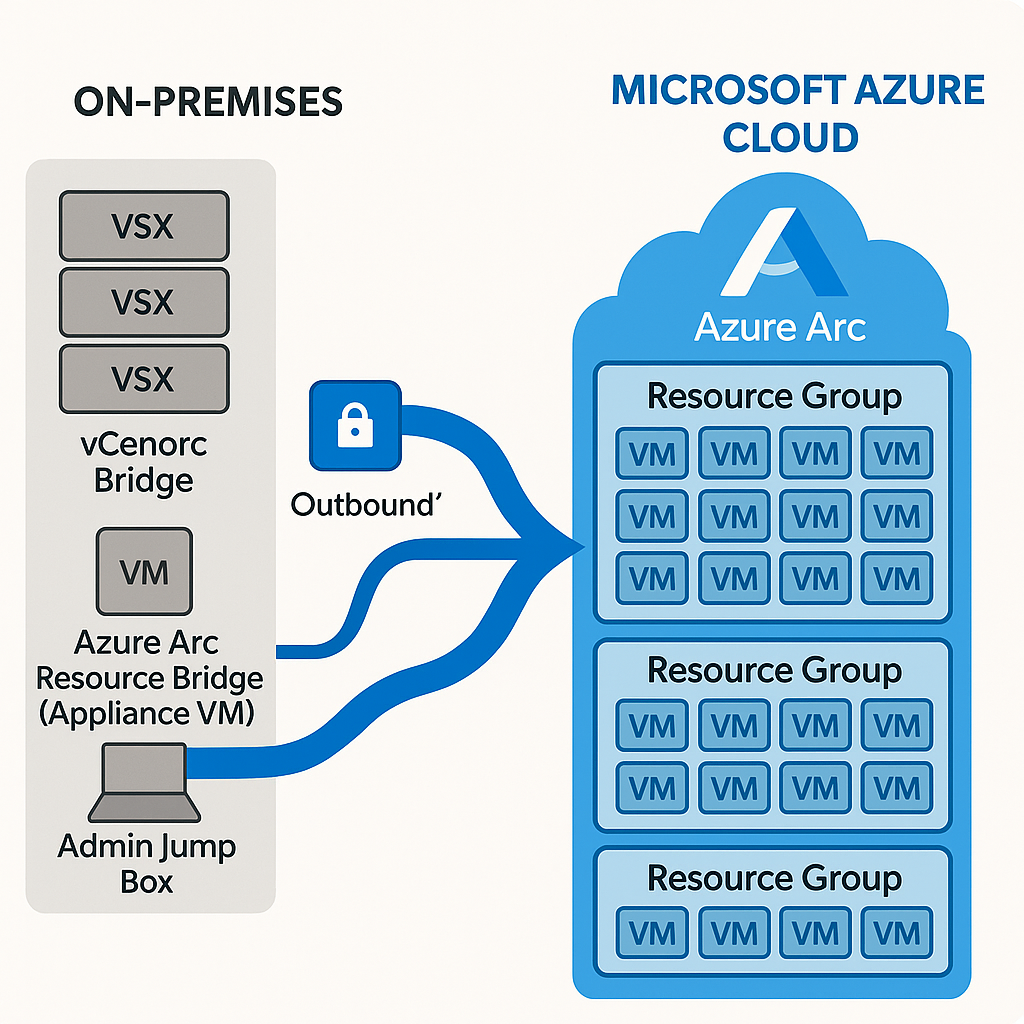

How Azure Arc Actually Connects to vCenter

Before we dive into implementation, here's what the architecture actually looks like:

What this diagram shows:

1. Left side (On-Premises): Your vCenter environment with VMs and the vCenter Server

2. Middle (The Bridge): Arc Resource Bridge appliance deployed FROM a jump box

3. Right side (Azure Cloud): Azure Arc services that manage your on-prem VMs

The critical flow:

- Jump box (admin workstation) runs deployment scripts

- Scripts create the Arc Resource Bridge as a Kubernetes appliance VM in vCenter

- Resource Bridge connects to Azure and registers your vCenter

- Azure Portal now shows vCenter inventory

- You then Arc-enable individual VMs for management

Keep this diagram in mind - it's the foundation for everything that follows.

Quick Start: Single vCenter POC (Skip the Enterprise Complexity)

If you're testing Arc with a single vCenter and <50 VMs, here's the fast path:

30-Minute POC Setup:

# 1. Deploy jump box (or use your admin workstation)

# Install prerequisites:

winget install Microsoft.Azure.CLI

winget install Microsoft.PowerShell

Install-Module VMware.PowerCLI -Force

az extension add --name arcappliance

az extension add --name connectedvmware

# 2. Create resource group

az group create --name arc-poc-rg --location eastus

# 3. Create Arc config (interactive prompts will guide you)

az arcappliance createconfig vmware `

--resource-group arc-poc-rg `

--name arc-poc `

--location eastus `

--out-dir ./arc-poc-config

# Edit arc-poc-config/config.yaml with your vCenter details

# (IP, credentials, network - takes 5 minutes)

# 4. Deploy Resource Bridge (30-45 minutes)

az arcappliance deploy vmware `

--config-file ./arc-poc-config/config.yaml `

--outfile ./arc-poc-config/kubeconfig

az arcappliance create vmware `

--config-file ./arc-poc-config/config.yaml `

--kubeconfig ./arc-poc-config/kubeconfig `

--resource-group arc-poc-rg `

--name arc-poc `

--location eastus

# 5. Connect vCenter

az connectedvmware vcenter connect `

--resource-group arc-poc-rg `

--name vcenter-poc `

--custom-location arc-poc-cl `

--location eastus `

--fqdn vcenter.company.local `

--username administrator@vsphere.local `

--password "YourPassword"

# 6. Enable guest management on 5 test VMs from Azure Portal

# (Click VM -> Enable guest management -> Apply)

# Done. You now have Arc visibility into vCenter.

POC Success Criteria:

- VMs visible in Azure Portal

- Can query VM inventory with Resource Graph

- Guest management enabled on test VMs

- Total setup time: 2-3 hours

When to graduate to enterprise deployment:

- You've proven Arc works in your environment

- Leadership approved budget

- You've collected tagging metadata (or accepted you'll fix it later)

- You're ready to deploy to 100+ VMs

For POCs, skip the tagging complexity. Get it working first, add governance when you scale.

Understanding Multi-vCenter Environments

Most enterprises don't have one vCenter. You have:

- Production vCenter

- Non-production vCenter (dev/test)

- DR/failover vCenter

- Potentially acquired company vCenters (merger scenarios)

Example architecture:

- vcenter-01 = Production workloads

- vcenter-02 = Development and test environments

- vcenter-03 = Disaster recovery site

Each vCenter manages hundreds to thousands of VMs. You need Azure Arc to:

- Get unified inventory across all vCenters

- Enable cloud management capabilities (monitoring, security, patching)

- Plan migration to Azure with proper visibility

- Allocate costs during hybrid state (on-prem + Azure)

Figure 2: What proper Arc organization looks like - Multiple resource groups, clear structure, organized by purpose

But here's what Microsoft doesn't explain clearly: connecting vCenter to Azure is a two-phase process.

The Two-Phase Deployment Nobody Explains

This is where everyone gets confused. Azure Arc for VMware has two distinct deployment phases.

But before you can even start Phase 1, you need a jump box.

Phase 0: Deploy the Jump Box (The Prerequisite Nobody Mentions)

Before you can deploy the Arc Resource Bridge, you need a Windows VM in your vCenter environment to run the deployment scripts from.

What the jump box is:

- A Windows Server 2019/2022 or Windows 10/11 VM

- Deployed IN your vCenter environment (not in Azure, not on your laptop)

- Has network access to vCenter (port 443)

- Has outbound internet access to Azure and GitHub

- Serves as the deployment tool for Arc Resource Bridge

Why you need it:

- The Arc deployment scripts must run from a system WITH network access to vCenter

- You can't deploy from Azure Cloud Shell (no vCenter access)

- You can't deploy from your laptop over VPN (too slow, will timeout)

- The scripts download a large OVA file and deploy it into vCenter

Jump box specifications:

- 2-4 vCPU

- 8GB RAM

- 50GB disk (minimum 20GB free for OVA download)

- Windows Server 2019+ or Windows 10/11

- Network connectivity to vCenter management network

- Outbound HTTPS to internet

Software to install on jump box:

# Install Azure CLI

Invoke-WebRequest -Uri https://aka.ms/installazurecliwindows -OutFile .\AzureCLI.msi

Start-Process msiexec.exe -Wait -ArgumentList '/I AzureCLI.msi /quiet'

# Install PowerShell 7

winget install Microsoft.PowerShell

# Install VMware PowerCLI

Install-Module -Name VMware.PowerCLI -Force -AllowClobber

# Install Arc appliance extensions

az extension add --name arcappliance

az extension add --name connectedvmware

# Login to Azure

az login

One jump box can deploy multiple Resource Bridges:

You can use the same jump box to deploy Arc Resource Bridges for vcenter-01, vcenter-02, and vcenter-03. You don't need a separate jump box per vCenter.

Phase 1: Deploy the Arc Resource Bridge (FROM the Jump Box)

The Arc Resource Bridge connects your vCenter infrastructure to Azure. Think of it as the "connector" layer.

What the Resource Bridge actually is:

- A Kubernetes-based appliance VM deployed INTO your vCenter environment

- Runs as a VM in vCenter (minimum 4 vCPU, 8GB RAM - Microsoft recommends 16GB for production)

- Requires 3 static IP addresses (2 for appliance VMs, 1 for control plane endpoint)

- Provides the connection between vCenter and Azure

- Deployed from a Windows jump box that has network access to vCenter

What the Resource Bridge does:

- Authenticates to your vCenter instance

- Discovers VMs in vCenter inventory

- Makes VMs visible in Azure Portal

- Enables self-service VM operations from Azure

What the Resource Bridge does NOT do:

- Install agents on VMs

- Enable guest management

- Enable ESU licensing

- Enable Azure Monitor or security features

The Deployment Reality:

You run the deployment script FROM a jump box (Windows VM) IN the vCenter environment. The script:

1. Downloads the Arc Resource Bridge appliance OVA

2. Deploys it as a VM into vCenter

3. Configures the Kubernetes cluster inside the appliance

4. Connects the appliance to Azure

5. Registers your vCenter with Azure Arc

Prerequisites for the jump box:

- Windows Server 2019/2022 or Windows 10/11

- Network access to vCenter (port 443)

- Network access to Azure (outbound HTTPS)

- Azure CLI installed

- PowerShell 7+

- 20GB free disk space (for OVA download)

How to deploy from the jump box:

# Run this FROM your vCenter jump box

# Install prerequisites

Install-Module -Name VMware.PowerCLI -Force

az extension add --name arcappliance

az extension add --name connectedvmware

# Create config file for vcenter-01

az arcappliance createconfig vmware `

--resource-group arc-infrastructure-rg `

--name arc-bridge-vcenter-01 `

--location eastus `

--out-dir ./vcenter-01-config

# Edit the generated config.yaml with your vCenter details:

# - vCenter IP/hostname

# - vCenter credentials (service account)

# - Network configuration for the appliance VM

# - Datastore for appliance deployment

# Deploy the Resource Bridge (this takes 30-45 minutes)

az arcappliance deploy vmware `

--config-file ./vcenter-01-config/config.yaml `

--outfile ./vcenter-01-config/kubeconfig

# Create the Azure Arc connection

az arcappliance create vmware `

--config-file ./vcenter-01-config/config.yaml `

--kubeconfig ./vcenter-01-config/kubeconfig `

--resource-group arc-infrastructure-rg `

--name arc-bridge-vcenter-01 `

--location eastus

# Connect your vCenter to Azure

az connectedvmware vcenter connect `

--resource-group arc-infrastructure-rg `

--name vcenter-01 `

--custom-location arc-bridge-vcenter-01-cl `

--location eastus `

--fqdn vcenter-01.company.local `

--username svc-arc@company.local `

--password $vCenterPassword

# Repeat entire process for vcenter-02 and vcenter-03

What you'll see in vCenter:

After deployment, you'll have a new VM in vCenter named something like arc-bridge-vcenter-01-appliance. This is the Resource Bridge running Kubernetes. Don't delete it or power it off.

What you'll see in Azure Portal:

Your vCenter appears as a resource in Azure. All VMs from that vCenter are now visible in Azure Portal under "Azure Arc > VMware vCenters".

Common mistakes:

-

Wrong network configuration: The appliance VM needs an IP address in your vCenter network. If you configure the wrong subnet, deployment fails 30 minutes in.

-

Insufficient vCenter permissions: The service account needs these permissions:

- Read-only on VMs

- Deploy OVF template

-

Create/delete VMs (for the appliance)

-

Firewall blocking deployment: The jump box needs outbound HTTPS to:

- vCenter (port 443)

- Azure (management.azure.com, login.microsoftonline.com)

-

GitHub (to download OVA)

-

Running from Azure instead of on-premises: The deployment script must run from a jump box WITH network access to vCenter. You can't deploy from Azure Cloud Shell or your laptop over VPN (too slow, will timeout).

-

Thinking you're done: People deploy the Resource Bridge, see VMs in Azure Portal, and think Arc is working. Wrong. The VMs are visible but not Arc-enabled. You're looking at VMware inventory reflected in Azure, not Arc-managed resources.

Phase 2: Deploy Arc Agents to Individual VMs

After the Resource Bridge is deployed, you need to Arc-enable each VM by installing the Connected Machine agent.

What the Arc agent does:

- Enables guest OS management from Azure

- Enables ESU licensing (for Windows Server 2012/R2)

- Enables Azure Monitor integration

- Enables Azure Policy guest configuration

- Enables extension management (security, backup, etc.)

Critical insight: The Resource Bridge makes VMs discoverable. The Arc agent makes them manageable.

How to deploy at scale:

# Script to deploy Arc agents to VMs from vcenter-01

$ServicePrincipalId = "YOUR-SP-ID"

$ServicePrincipalSecret = "YOUR-SP-SECRET" | ConvertTo-SecureString -AsPlainText -Force

$TenantId = "YOUR-TENANT-ID"

$SubscriptionId = "YOUR-SUBSCRIPTION-ID"

$ResourceGroup = "arc-vcenter-01-rg"

$Location = "eastus"

# Tags to apply during onboarding - THIS IS CRITICAL

$Tags = @{

"vcenter-source" = "vcenter-01"

"environment" = "production"

"migration-wave" = "wave-2"

"cost-center" = "IT-INFRASTRUCTURE"

}

# Deploy to target VMs

$VMs = Get-VM -Location vcenter-01 | Where-Object {$_.PowerState -eq "PoweredOn"}

foreach ($VM in $VMs) {

Invoke-VMScript -VM $VM -ScriptText @"

# Download Arc agent

Invoke-WebRequest -Uri 'https://aka.ms/AzureConnectedMachineAgent' -OutFile AzureConnectedMachineAgent.msi

# Install agent

msiexec /i AzureConnectedMachineAgent.msi /quiet /norestart

# Connect to Azure with tags

& "$env:ProgramFiles\AzureConnectedMachineAgent\azcmagent.exe" connect `

--service-principal-id $ServicePrincipalId `

--service-principal-secret $ServicePrincipalSecret `

--tenant-id $TenantId `

--subscription-id $SubscriptionId `

--resource-group $ResourceGroup `

--location $Location `

--tags "vcenter-source=vcenter-01,environment=production,migration-wave=wave-2,cost-center=IT-INFRASTRUCTURE"

"@

}

Why this matters: If you deploy the Resource Bridge but don't install agents, you get Azure Portal visibility but zero management capability. People will ask "why aren't my VMs getting ESU patches?" and the answer is "because you only completed phase 1."

The Tagging Strategy That Prevents Disasters

Tags are not optional. Tags are the only way to organize multi-vCenter environments in Azure.

Without tags, you have 1,200 VMs in Azure Portal with names like:

- SQLPROD03

- WEBSERVER17

- APPVM-2024-NEW

Good luck figuring out:

- Which vCenter is this VM from?

- Is this production or dev?

- Which business unit pays for this?

- What application does it run?

- What's the migration priority?

Required Tag Schema

Apply these tags during Arc agent deployment, not after:

vcenter-source: "vcenter-01" | "vcenter-02" | "vcenter-03"

# Which vCenter instance is this VM from?

# Critical for migration wave planning

environment: "production" | "development" | "test" | "dr-failover"

# Determines migration priority and support criticality

cost-center: "FINANCE-001" | "IT-INFRASTRUCTURE" | "SALES-EAST"

# Required for chargeback and budget allocation

app-owner: "john.smith@company.com" | "finance-team@company.com"

# Who to contact about this workload

application: "erp-system" | "crm-prod" | "internal-portal"

# What business function does this support

migration-wave: "wave-1" | "wave-2" | "wave-3" | "not-planned"

# Deployment sequence for Azure migration

azure-ready: "yes" | "no" | "needs-assessment"

# Can this VM migrate to Azure as-is?

compliance-scope: "sox" | "pci" | "hipaa" | "none"

# Regulatory requirements affecting architecture

Why Tag During Deployment, Not After

Wrong approach:

1. Deploy Arc agents to all VMs (no tags)

2. "We'll add tags later when we have time"

3. Three months pass

4. Finance asks for cost breakdown by department

5. You have 1,200 untagged VMs and no way to retroactively determine ownership

Right approach:

1. Collect tag data BEFORE deploying Arc (see next section)

2. Deploy Arc agents WITH tags in the deployment script

3. Tags appear in Azure Portal immediately

4. Resource Graph queries work from day one

The reality: Retroactive tagging is brutally hard. You'll need to:

- Match VM names to application databases (often incomplete)

- Email application owners (who don't respond)

- Cross-reference cost center codes (which changed last year)

- Make educated guesses (which finance will reject)

Tag during deployment or accept that you'll never have complete metadata.

The Real Bottleneck: Data Collection

Here's what nobody tells you about Azure Arc implementation: the technology is the easy part.

Installing the Arc Resource Bridge takes 30 minutes. Installing agents on 1,200 VMs takes a weekend with scripting.

Getting the data to tag those VMs properly takes three months.

What You Need to Collect

Before you can deploy Arc with proper governance, you need:

From Finance:

- Valid cost center codes for each department

- Budget allocation model (centralized vs. chargeback)

- Approval for new Azure spending categories

- Expected monthly costs for Arc management

From Application Teams:

- Application inventory (what runs on each VM?)

- Business owner contacts

- Criticality ratings (tier 1/2/3)

- Support expectations

From Infrastructure Teams:

- vCenter inventory exports

- Network dependencies between VMs

- Backup and DR requirements

- Patching schedules and maintenance windows

From Security/Compliance:

- Regulatory scope for each application (SOX, PCI, HIPAA)

- Approval for Arc agent installation (new software on servers)

- Required security configurations

- Audit requirements

From Business Leadership:

- Cloud migration strategy

- Timeline and priorities

- Budget constraints

- Success criteria

Why This Takes Forever

The email approach doesn't work:

- Finance doesn't respond (they're busy with quarter close)

- Application teams don't track ownership (knowledge left with Bob who retired)

- Security wants committee approval (meeting scheduled for next month)

The meeting approach is slow:

- Scheduling 8 stakeholder calendars takes two weeks

- Half the attendees don't show up

- Decisions require "further discussion"

- Action items get lost in email threads

The phone call approach works but is exhausting:

- Call Finance: "I need cost center codes for 1,200 VMs"

- They ask: "Can you send a spreadsheet?"

- You send spreadsheet with VM names

- They respond: "We don't recognize these VM names, can you map to applications?"

- You don't have application mapping

- Call application teams...

- (Repeat for three months)

Budget 2-3 weeks minimum for data collection. For complex environments with poor documentation, budget 2-3 months.

The Arc agent installs in 5 minutes. Getting the metadata to tag it properly is the actual project.

The Complete Implementation Checklist

Here's the step-by-step process that actually works.

Phase 0: Pre-Deployment Planning (Week 1-4)

☐ Document Current State

☐ List all vCenter instances and versions

☐ Export VM inventory from each vCenter

☐ Document VM counts: production, dev/test, DR

☐ Identify applications running on VMs (as best you can)

☐ Note VMs with special requirements (GPU, high memory, etc.)

☐ Define Azure Organization Strategy

☐ Subscription model: one sub for all Arc? One per vCenter? One per business unit?

☐ Resource group model: by vCenter? By application? By environment?

☐ Naming convention for Arc resources

☐ RBAC model: who can deploy? Who can manage? Who can view?

☐ Build Tagging Taxonomy

☐ Define required tags (see schema above)

☐ Get Finance approval on cost-center codes

☐ Get Security approval on compliance-scope values

☐ Document tag enforcement policy

☐ Collect Metadata (THE HARD PART)

☐ Call Finance for cost center mapping

☐ Call application teams for ownership data

☐ Call business units for migration priorities

☐ Map VMs to applications (manually if needed)

☐ Build master spreadsheet with all tag values

☐ Get Approvals

☐ Budget approval for Arc licensing costs

☐ Security approval for agent installation

☐ Change control approval for production VMs

☐ Business unit sign-off on migration plan

Reality check: This phase takes longer than the actual deployment. Don't skip it. Deploying Arc without this planning creates an unfixable mess.

Phase 0: Jump Box Deployment (Week 4)

☐ Deploy Jump Box VM in vCenter

☐ Create new Windows Server 2019/2022 VM in vCenter (or identify existing)

☐ Allocate 2-4 vCPU, 8GB RAM, 50GB disk

☐ Place on network with access to vCenter management interface

☐ Configure outbound internet access (direct or via proxy)

☐ Install Windows updates

☐ Join to domain (if required for your environment)

☐ Install Required Software on Jump Box

☐ Install Azure CLI (az)

☐ Install PowerShell 7+

☐ Install VMware PowerCLI module

☐ Install Azure Arc appliance extensions (arcappliance, connectedvmware)

☐ Test Azure connectivity (az login)

☐ Test vCenter connectivity (Connect-VIServer)

☐ Validate Jump Box Prerequisites

☐ Verify 20GB+ free disk space for OVA downloads

☐ Verify network access to vCenter (port 443)

☐ Verify outbound HTTPS to Azure (management.azure.com, login.microsoftonline.com)

☐ Verify outbound HTTPS to GitHub (to download OVA files)

☐ Test RDP access to jump box for deployment sessions

### Phase 1: Arc Resource Bridge Deployment (Week 5)

☐ Azure Infrastructure Setup

☐ Create subscriptions (if using multiple)

☐ Create resource groups for Arc infrastructure

☐ Create service principals for Arc authentication

☐ Assign RBAC permissions (custom roles may be required)

☐ Configure Azure Policy for Arc governance

☐ Prepare vCenter for Arc

☐ Create service account with required permissions:

☐ Read-only on VMs

☐ Deploy OVF template

☐ Create/delete VMs

☐ Identify datastore for appliance VM deployment

☐ Identify network/VLAN for appliance VM (needs IP address)

☐ Reserve static IP or configure DHCP reservation for appliance

☐ Deploy Arc Resource Bridge - vcenter-01 (Production)

☐ RDP to jump box in vCenter environment

☐ Run az arcappliance createconfig to generate config files

☐ Edit config.yaml with vCenter details (IP, credentials, network, datastore)

☐ Run az arcappliance deploy (takes 30-45 minutes)

☐ Run az arcappliance create to connect to Azure

☐ Run az connectedvmware vcenter connect to register vCenter

☐ Validate appliance VM appears in vCenter

☐ Validate vCenter resource appears in Azure Portal

☐ Verify VM inventory appears in Azure Portal

☐ Deploy Arc Resource Bridge - vcenter-02 (Non-Production)

☐ Repeat deployment process

☐ Use separate resource group or naming convention

☐ Validate connection and inventory

☐ Deploy Arc Resource Bridge - vcenter-03 (DR Site)

☐ Repeat deployment process

☐ Tag infrastructure with dr-site designation

☐ Validate connection and inventory

☐ Validate Resource Bridge Deployment

☐ All VMs from all vCenters visible in Azure Portal

☐ VM metadata (CPU, memory, OS) populated correctly

☐ Resource Bridge health status: healthy

☐ No authentication or connectivity errors

### Phase 2: Arc Agent Deployment with Tags (Week 6-8)

☐ Prepare Agent Deployment

☐ Load metadata spreadsheet with tag values

☐ Create deployment scripts with tag application

☐ Test agent deployment on 5 VMs from each vCenter

☐ Validate tags appear correctly in Azure Portal

☐ Test Resource Graph queries for filtering by tags

☐ Deploy Arc Agents - Wave 1 (vcenter-02 Non-Production)

☐ Deploy agents to development VMs first

☐ Apply all required tags during deployment

☐ Monitor for deployment failures (network, permissions, TLS issues)

☐ Validate agent connectivity and health

☐ Verify tags in Azure Portal and Resource Graph

☐ Wait 48 hours to observe for issues

☐ Deploy Arc Agents - Wave 2 (vcenter-03 DR Site)

☐ Deploy to DR VMs

☐ Monitor for performance impact (DR sites often have lower specs)

☐ Validate agent health

☐ Verify no impact to DR replication or failover capability

☐ Deploy Arc Agents - Wave 3 (vcenter-01 Production)

☐ Schedule during maintenance window

☐ Deploy in batches (200 VMs at a time)

☐ Monitor application health after each batch

☐ Have rollback plan ready (uninstall script)

☐ Complete deployment over 2-3 weeks to minimize risk

**Common deployment failures:**

**TLS 1.2 not enabled (Windows Server 2012):**

```powershell

# Enable TLS 1.2 before Arc agent installation

Set-ItemProperty -Path 'HKLM:\SOFTWARE\Microsoft\.NETFramework\v4.0.30319' -Name 'SchemeUseStrongCrypto' -Value 1 -Type DWord

Set-ItemProperty -Path 'HKLM:\SOFTWARE\Wow6432Node\Microsoft\.NETFramework\v4.0.30319' -Name 'SchemeUseStrongCrypto' -Value 1 -Type DWord

Restart-Computer -Force

Firewall blocking Arc endpoints:

Required outbound HTTPS (443) to:

- management.azure.com

- login.microsoftonline.com

- *.guestconfiguration.azure.com

- *.his.arc.azure.com

- *.servicebus.windows.net

Permission errors:

Verify service principal has these permissions:

- Microsoft.HybridCompute/machines/read

- Microsoft.HybridCompute/machines/write

- Microsoft.HybridCompute/machines/extensions/write

Phase 3: Validation and Governance (Week 9-10)

☐ Validate Complete Deployment

☐ All VMs have Arc agents installed

☐ All VMs have required tags applied

☐ Agent health status: connected

☐ No error states in Azure Portal

☐ Test Resource Graph Queries

☐ Query by vcenter-source (can you filter by vCenter?)

☐ Query by cost-center (can Finance generate reports?)

☐ Query by migration-wave (can you plan deployment sequences?)

☐ Query by compliance-scope (can Security audit regulatory scope?)

☐ Enable Arc Features

☐ Azure Monitor integration (if required)

☐ Azure Policy guest configuration

☐ Azure Update Manager for patching

☐ ESU licensing (for Windows Server 2012/R2)

☐ Microsoft Defender for Cloud integration

☐ Set Up Monitoring and Alerting

☐ Alert on Arc agent disconnections

☐ Alert on agent health failures

☐ Monitor Arc infrastructure costs

☐ Track agent deployment coverage (% of VMs Arc-enabled)

☐ Configure Cost Management

☐ Set up cost allocation by vcenter-source tag

☐ Set up cost allocation by cost-center tag

☐ Generate monthly reports for Finance

☐ Track Arc licensing costs separately from Azure consumption

☐ Documentation and Runbooks

☐ Document Arc architecture decisions

☐ Create runbook for adding new VMs to Arc

☐ Create runbook for troubleshooting agent failures

☐ Create runbook for tag management and updates

☐ Document escalation process for Arc issues

Phase 4: Migration Planning (Week 11+)

☐ Use Arc Data for Migration Planning

☐ Query VMs by migration-wave tag

☐ Identify dependencies between VMs using network data

☐ Assess azure-ready status for each VM

☐ Calculate migration costs (Azure consumption + Arc management)

☐ Build migration timeline by wave

☐ Pilot Migration (vcenter-02 VMs first)

☐ Select 10-20 non-critical dev/test VMs

☐ Migrate to Azure using Azure Migrate

☐ Validate application functionality post-migration

☐ Measure cost differences (vCenter vs Azure)

☐ Document lessons learned

☐ Production Migration Planning

☐ Use vcenter-01 tags to prioritize applications

☐ Start with non-critical production workloads

☐ Keep vcenter-03 DR site as fallback during migration

☐ Monitor Arc data for performance baselines

☐ Plan for hybrid state (some VMs on-prem, some in Azure)

The KQL Queries That Make This Work

Once you have Arc deployed with proper tags, these Resource Graph queries give you the visibility you need.

Query 1: VM Count by vCenter Source

resources

| where type == "microsoft.hybridcompute/machines"

| extend vcenterSource = tags.vcenter_source

| summarize VMCount = count() by vcenterSource

| order by VMCount desc

Output:

vcenter_source VMCount

vcenter-01 450

vcenter-02 280

vcenter-03 150

Now you know exactly how many VMs you have in each vCenter, visible in Azure.

Query 2: Migration Wave Planning

resources

| where type == "microsoft.hybridcompute/machines"

| extend vcenterSource = tags.vcenter_source

| extend migrationWave = tags["migration-wave"]

| extend azureReady = tags["azure-ready"]

| where migrationWave != "" and migrationWave != "not-planned"

| summarize VMCount = count() by migrationWave, azureReady

| order by migrationWave

Output:

migration_wave azure_ready VMCount

wave-1 yes 45

wave-1 needs-assessment 12

wave-2 yes 120

wave-2 no 35

wave-3 yes 200

Now you can plan migration sprints. Wave 1 has 45 VMs ready to migrate immediately, but 12 need assessment first.

Query 3: Cost Allocation by Department

resources

| where type == "microsoft.hybridcompute/machines"

| extend costCenter = tags["cost-center"]

| extend vcenterSource = tags.vcenter_source

| summarize VMCount = count() by costCenter, vcenterSource

| order by costCenter

Output:

cost_center vcenter_source VMCount

FINANCE-001 vcenter-01 80

FINANCE-001 vcenter-02 25

IT-INFRASTRUCTURE vcenter-01 120

IT-INFRASTRUCTURE vcenter-03 150

SALES-EAST vcenter-01 45

Now Finance can allocate Arc management costs back to departments. Finance runs 105 VMs (80 prod + 25 dev), IT Infrastructure runs 270 VMs.

Query 4: Find Untagged VMs (Data Quality Check)

resources

| where type == "microsoft.hybridcompute/machines"

| extend vcenterSource = tags.vcenter_source

| extend costCenter = tags["cost-center"]

| extend appOwner = tags["app-owner"]

| where isnull(vcenterSource) or isnull(costCenter) or isnull(appOwner)

| project name, vcenterSource, costCenter, appOwner, resourceGroup

| order by name

This finds VMs missing required tags. Run this weekly and fix tag gaps before Finance asks for reports.

Query 5: Production VMs by Compliance Scope

resources

| where type == "microsoft.hybridcompute/machines"

| extend environment = tags.environment

| extend complianceScope = tags["compliance-scope"]

| where environment == "production"

| summarize VMCount = count() by complianceScope

| order by VMCount desc

Output:

compliance_scope VMCount

sox 180

pci 45

hipaa 30

none 195

Security can now audit regulatory scope. 180 VMs are SOX-regulated and require specific Azure Policy configurations.

Query 6: Arc Agent Health Status

resources

| where type == "microsoft.hybridcompute/machines"

| extend vcenterSource = tags.vcenter_source

| extend agentStatus = properties.status

| summarize VMCount = count() by vcenterSource, agentStatus

| order by vcenterSource, agentStatus

Output:

vcenter_source agent_status VMCount

vcenter-01 Connected 445

vcenter-01 Disconnected 5

vcenter-02 Connected 278

vcenter-02 Disconnected 2

Monitor agent health by vCenter. If 5 VMs from vcenter-01 are disconnected, investigate network or authentication issues.

What To Do If You Already Deployed Without Planning

Maybe you're reading this after you already deployed Arc to 1,200 VMs with no tags. Everything in one subscription, one resource group, zero governance.

I've been there. Here's how to fix it.

Step 1: Accept Reality

You cannot retroactively apply tags at scale without significant manual effort. There is no "magic script" that automatically discovers application owners and cost centers.

Budget 4-8 weeks to fix this mess.

Step 2: Export Current Inventory

# Export all Arc machines to CSV

az graph query -q "resources | where type == 'microsoft.hybridcompute/machines' | project name, resourceGroup, location, properties.osName" --output table > arc-inventory.csv

Now you have a spreadsheet with all Arc-enabled VMs.

Step 3: Map VMs to Metadata (The Hard Part)

Send this spreadsheet to:

- Finance: "Please fill in cost-center column"

- Application teams: "Please fill in app-owner and application columns"

- Infrastructure: "Please fill in vcenter-source column"

They will not fill it out completely. You'll get 60% coverage if you're lucky.

For the remaining 40%, you need to:

- Cross-reference VM names against CMDBs (if you have one)

- Match VM names to vCenter inventory exports (to determine vcenter-source)

- Make educated guesses based on naming conventions

- Accept that some VMs will remain "unknown" until someone complains

Step 4: Apply Tags Programmatically

# Read CSV with tag data

$VMTagData = Import-Csv -Path "arc-inventory-with-tags.csv"

foreach ($VM in $VMTagData) {

$ResourceId = "/subscriptions/$SubscriptionId/resourceGroups/$($VM.resourceGroup)/providers/Microsoft.HybridCompute/machines/$($VM.name)"

$Tags = @{

"vcenter-source" = $VM.vcenter_source

"cost-center" = $VM.cost_center

"app-owner" = $VM.app_owner

"environment" = $VM.environment

"migration-wave" = $VM.migration_wave

}

# Apply tags

az tag create --resource-id $ResourceId --tags $Tags

}

Step 5: Reorganize Resource Groups (Optional)

If everything is in one resource group and you want to split by vCenter:

# Move vcenter-01 VMs to dedicated resource group

$Vcenter01VMs = az graph query -q "resources | where type == 'microsoft.hybridcompute/machines' and tags.vcenter_source == 'vcenter-01'" --output json | ConvertFrom-Json

foreach ($VM in $Vcenter01VMs.data) {

az resource move --ids $VM.id --destination-group "arc-vcenter-01-rg"

}

Warning: Moving resources can break dependencies like Azure Monitor dashboards and Policy assignments. Test on 5 VMs first.

Step 6: Prevent Future Tag Drift

# Azure Policy to require tags on new Arc machines

{

"mode": "Indexed",

"policyRule": {

"if": {

"allOf": [

{

"field": "type",

"equals": "Microsoft.HybridCompute/machines"

},

{

"field": "tags['vcenter-source']",

"exists": "false"

}

]

},

"then": {

"effect": "deny"

}

}

}

This prevents future Arc deployments without required tags.

Common Arc Implementation Mistakes

After helping multiple organizations deploy Arc, here are the patterns I see repeatedly:

Mistake 1: "We'll Tag Later"

The trap: Deploy Arc agents fast, plan to add tags afterward.

Why it fails: Retroactive tagging requires data you don't have. VM names don't map to applications. Application owners don't respond to surveys. Cost centers changed last year.

The fix: Collect tag data BEFORE deployment. Delay deployment by 4 weeks if needed. Proper tags are worth it.

Mistake 2: Ignoring the Two-Phase Deployment

The trap: Deploy Arc Resource Bridge, assume VMs are now Arc-enabled.

Why it fails: The Resource Bridge makes VMs visible but doesn't install agents. No agents = no management capability.

The fix: Understand that Resource Bridge ≠ Arc agents. Budget time for both phases.

Mistake 3: Using Only OOTB RBAC Roles

The trap: Assign "Contributor" role and start deploying.

Why it fails: Arc requires specific permissions that don't exist in standard roles. Deployments fail with cryptic "access denied" errors.

The fix: Create custom RBAC roles with Arc-specific permissions:

- Microsoft.HybridCompute/machines/read

- Microsoft.HybridCompute/machines/write

- Microsoft.HybridCompute/machines/extensions/write

- Microsoft.HybridCompute/machines/licenseProfiles/write (for ESU)

Mistake 4: One Subscription for Everything

The trap: Put all Arc resources in one subscription to "keep it simple."

Why it fails: You can't separate costs by business unit. Finance can't charge back departments. Migration planning is harder (can't separate prod from dev subscriptions).

The fix: Use subscription strategy aligned with your organization:

- Option A: One subscription per vCenter

- Option B: One subscription per environment (prod/dev/dr)

- Option C: One subscription per business unit

Mistake 5: Assuming WSUS Integration Works

The trap: Enable Arc ESU and expect patches to flow through existing WSUS infrastructure.

Why it fails: WSUS doesn't validate ESU licenses. Patches approve for VMs that can't install them. Compliance reports become garbage.

The fix: Use Azure Update Manager instead of WSUS for Arc-enabled VMs. Query Resource Graph for patch compliance, not WSUS.

Mistake 6: No Monitoring for Agent Health

The trap: Deploy agents and assume they'll stay connected forever.

Why it fails: Agents disconnect due to network issues, certificate expiration, Azure API throttling, TLS configuration changes. Silent failures = VMs stop getting patches.

The fix: Set up Azure Monitor alerts for disconnected agents:

resources

| where type == "microsoft.hybridcompute/machines"

| extend agentStatus = properties.status

| where agentStatus != "Connected"

| project name, agentStatus, resourceGroup, tags

Alert when count > 0.

The Questions to Ask Your Boss Before Deploying Anything

Before you install a single Arc agent, sit down with leadership and get answers to these questions. Email doesn't work. Schedule a meeting. Get decisions.

Question 1: Subscription Strategy

"Do we want one Azure subscription for all Arc resources, or separate subscriptions by vCenter/environment/business unit?"

Why this matters: You can't easily move resources between subscriptions later. This decision is permanent.

Get specific:

- Who pays for Arc costs? (centralized IT budget vs. departmental chargeback)

- How do we separate prod from non-prod spending?

- What happens when we acquire another company? (merger scenario)

Question 2: Tagging Taxonomy

"What metadata do we need to track for every VM? Who will provide this data?"

Why this matters: Without tags, you can't allocate costs, plan migrations, or organize resources.

Get specific:

- "Finance, what cost center codes should we use?" (Get the actual codes, not "we'll figure it out")

- "Application teams, who should we list as app-owner?" (Get email addresses, not "the team")

- "Security, what compliance scopes do we need to track?" (SOX, PCI, HIPAA, etc.)

Question 3: Migration Timeline

"When are we planning to migrate these VMs to Azure? What's the priority?"

Why this matters: If migration is 5+ years away, Arc might not be worth the cost. If migration is 6 months away, Arc helps with planning.

Get specific:

- "Which vCenter do we migrate first?" (Dev/test before production)

- "Which applications are migration priorities?" (Business-critical vs. nice-to-have)

- "What's blocking migration today?" (Technical debt, vendor support, budget)

Question 4: Cost Allocation Model

"How do we charge back Arc management costs to business units?"

Why this matters: Arc costs $5-15 per VM per month. For 1,200 VMs, that's $72K-180K annually. Someone has to pay.

Get specific:

- "Does IT absorb all Arc costs?" (centralized model)

- "Do we charge departments based on VM count?" (chargeback model)

- "Do we allocate based on cost-center tags?" (tag-based allocation)

Question 5: Governance and RBAC

"Who can deploy Arc agents? Who can manage Arc resources? Who can view data?"

Why this matters: Without RBAC planning, everyone becomes an Arc administrator or nobody can do their job.

Get specific:

- "Can application teams deploy their own Arc agents?" (self-service vs. centralized)

- "Can Finance view cost data without full Azure access?" (read-only roles)

- "Who handles Arc support escalations?" (operations model)

If your boss says "yeah yeah, we'll figure that out later" to any of these questions, do not proceed with deployment. You're setting up an unfixable mess.

Push back. Say: "I need these decisions before deployment. Retrofitting governance is 10x harder than planning upfront."

If they still push for fast deployment despite no strategy, document that you raised these concerns. When the mess happens (and it will), you'll need that paper trail.

Resources and Tools

Here are the PowerShell scripts, KQL queries, and templates I use for Arc implementations.

GitHub Repository

I've published a complete Arc implementation toolkit at:

github.com/azure-noob/arc-vcenter-toolkit

Includes:

- PowerShell script for Arc agent deployment with tags

- KQL query library for Resource Graph

- Tagging taxonomy template (Excel)

- Pre-deployment questionnaire for stakeholders

- Cost allocation calculator

- RBAC custom role definitions

Essential PowerShell Functions

Check Arc Agent Status Across All VMs:

function Get-ArcAgentHealth {

az graph query -q @"

resources

| where type == 'microsoft.hybridcompute/machines'

| extend agentStatus = properties.status

| extend lastHeartbeat = properties.lastStatusChange

| extend vcenterSource = tags.vcenter_source

| project name, agentStatus, lastHeartbeat, vcenterSource, resourceGroup

| order by agentStatus desc, name asc

"@ --output table

}

Bulk Tag Application:

function Set-ArcVMTags {

param(

[Parameter(Mandatory=$true)]

[string]$CsvPath,

[Parameter(Mandatory=$true)]

[string]$SubscriptionId

)

$VMData = Import-Csv -Path $CsvPath

foreach ($VM in $VMData) {

$ResourceId = "/subscriptions/$SubscriptionId/resourceGroups/$($VM.resourceGroup)/providers/Microsoft.HybridCompute/machines/$($VM.name)"

$Tags = @{}

if ($VM.vcenter_source) { $Tags["vcenter-source"] = $VM.vcenter_source }

if ($VM.cost_center) { $Tags["cost-center"] = $VM.cost_center }

if ($VM.app_owner) { $Tags["app-owner"] = $VM.app_owner }

if ($VM.environment) { $Tags["environment"] = $VM.environment }

if ($VM.migration_wave) { $Tags["migration-wave"] = $VM.migration_wave }

if ($Tags.Count -gt 0) {

Write-Host "Tagging $($VM.name)..."

az tag create --resource-id $ResourceId --tags $Tags

}

}

}

Find VMs Missing Required Tags:

function Get-UntaggedArcVMs {

az graph query -q @"

resources

| where type == 'microsoft.hybridcompute/machines'

| extend vcenterSource = tags.vcenter_source

| extend costCenter = tags['cost-center']

| extend appOwner = tags['app-owner']

| where isnull(vcenterSource) or isnull(costCenter) or isnull(appOwner)

| project name, vcenterSource, costCenter, appOwner, resourceGroup

| order by name asc

"@ --output table

}

Cost Allocation Queries

Monthly Arc Costs by vCenter:

// Run in Azure Cost Management

AzureConsumption

| where ResourceType == "microsoft.hybridcompute/machines"

| extend vcenterSource = tags["vcenter-source"]

| summarize MonthlyCost = sum(Cost) by vcenterSource, format_datetime(Date, 'yyyy-MM')

| order by Date desc, MonthlyCost desc

Arc Licensing Costs by Department:

AzureConsumption

| where ResourceType == "microsoft.hybridcompute/machines"

| extend costCenter = tags["cost-center"]

| where isnotempty(costCenter)

| summarize TotalCost = sum(Cost) by costCenter

| order by TotalCost desc

The Bottom Line

Azure Arc for multi-vCenter environments is powerful. When implemented correctly, it gives you:

- Unified inventory across all vCenters

- Cloud management for on-premises VMs

- Migration planning visibility

- Cost allocation by department

- Compliance tracking across hybrid infrastructure

But "implemented correctly" requires:

- Strategic planning before deployment (not after)

- Understanding the two-phase deployment model

- Comprehensive tagging from day one

- Weeks of data collection from stakeholders

- Clear governance and RBAC design

The technology is not the challenge. The organizational coordination is the challenge.

If Microsoft is pressuring you to "just deploy Arc fast," push back. Ask for 4 weeks to plan properly. Collect tag data before installing agents. Get leadership decisions on subscription strategy and cost allocation.

If your boss says "yeah yeah, we'll figure out governance later," recognize that you're being set up for failure. Document your concerns. Deploy with proper tags anyway (even if it delays the timeline). Your future self will thank you.

And if you're already in the mess—everything in one subscription, no tags, Finance asking questions you can't answer—know that you're not alone. Every enterprise I've worked with has made some version of these mistakes.

The path forward is clear: export inventory, collect metadata manually, apply tags retroactively, and prevent future tag drift with Azure Policy.

It's painful, but it's fixable.

Just don't make the same mistake twice.

📋 Related: Azure Arc Enterprise Readiness Checklist

Deploying Arc at enterprise scale? Download the complete pre-deployment validation checklist covering governance, networking, cost planning, and vCenter integration.

Download Free Checklist (PDF) | Excel Version

Have questions about Arc implementation? I've probably seen your problem. Drop a comment or check out the Arc vCenter toolkit repository for scripts and templates.

Dealing with a rushed Arc deployment? You're not alone. The pattern repeats across every enterprise. Share your story in the comments—other admins need to know they're not the only ones navigating this mess.

Azure Admin Starter Kit (Free Download)

Get my KQL cheat sheet, 50 Windows + 50 Linux commands, and an Azure RACI template in one free bundle.

Get the Starter Kit →✋ Stop. Don't Migrate Yet.

Answer 55 questions about each application before touching Azure Migrate. This framework prevents the $2M mistakes that kill enterprise migrations.

Get the Migration Assessment FrameworkExcel template • Complete instructions included • No email required