The New Azure Debugging Rule: When AI Says 'I Don't Know'

2025-10-06 · ~11 min read

Using KQL to debug AI-driven alerts, complex rules, and noisy signals in Azure Monitor so your dashboards stop lying to you.

This guide is part of our AI-Assisted Azure Operations hub exploring how AI tools transform cloud administration and productivity workflows.

I used to spend 3 hours debugging Azure issues before opening a support ticket. That was before AI.

Now? If Claude or ChatGPT can't solve my Azure problem in 30 minutes, I open a ticket immediately.

Here's why that's the right call in 2025.

The Old Debugging Workflow (Pre-2023)

When you hit an Azure issue, you'd spend hours doing this:

- Search Microsoft documentation

- Try Stack Overflow

- Dig through GitHub issues

- Read random blog posts

- Trial and error with configurations

- Eventually give up and open a ticket

This made sense because finding information was slow and manual. You had to hunt through fragmented documentation, old forum posts, and pray someone else hit your exact problem.

Three hours of research felt reasonable before escalating to Microsoft support.

The New Reality (2025)

AI has fundamentally changed this equation.

When you paste an Azure error into Claude or ChatGPT, it has already:

- Read every Microsoft doc

- Indexed all of Stack Overflow

- Processed every GitHub issue

- Analyzed thousands of blog posts

- Synthesized patterns from millions of conversations

If AI can't help you, neither can you.

That's your signal. You've hit the boundary of publicly available knowledge.

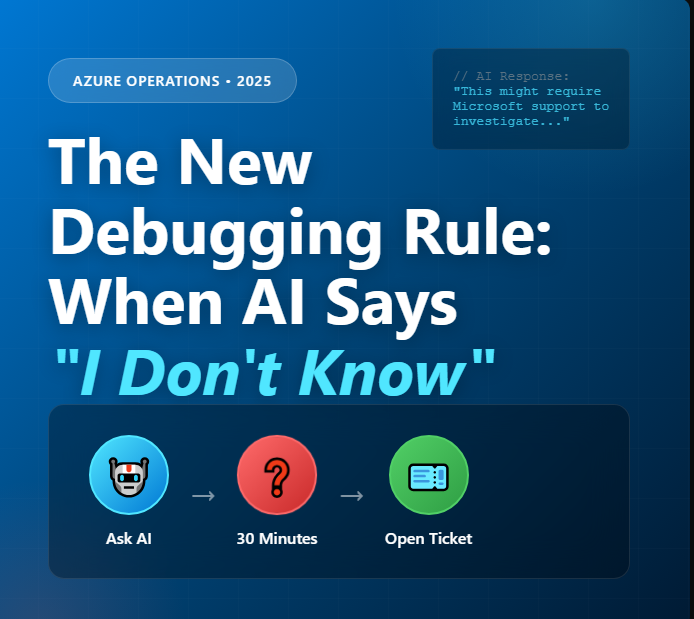

The New Rule

Azure Problem

↓

Ask AI (Claude, ChatGPT, Copilot)

↓

[30 minutes later]

↓

Did AI solve it?

↓ NO

↓

Open Microsoft Support Ticket

Don't keep banging your head against the wall. If the AI assistant that has read everything can't solve it, you need one of two things:

- Microsoft's internal knowledge - information that isn't public

- An actual bug fix - something broken that needs engineering intervention

Either way, you're not going to Google your way out of this one.

What AI is Actually Good At

Before we talk about when AI fails, let's acknowledge what it's excellent at:

Code Generation:

# Ask: "Write a PowerShell script to find all VMs without tags"

# Get: Working code in 10 seconds

Get-AzVM | Where-Object {$_.Tags.Count -eq 0} |

Select-Object Name, ResourceGroupName, Location

KQL Query Building:

// Ask: "Show me VMs that haven't been updated in 90 days"

// Get: Production-ready query

Resources

| where type == "microsoft.compute/virtualmachines"

| extend lastUpdateTime = properties.extended.instanceView.osProfile.windowsConfiguration.lastBootUpTime

| where lastUpdateTime < ago(90d)

| project name, resourceGroup, location, lastUpdateTime

Error Translation:

Error: "The provided credentials have insufficient privileges"

AI Response: "This is actually a role assignment issue. You need

Contributor access at the subscription level. Run:

New-AzRoleAssignment -SignInName user@domain.com -RoleDefinitionName

'Contributor' -Scope '/subscriptions/xxxx'"

Syntax Conversion:

# "Convert this Azure CLI command to PowerShell"

# From: az vm list --query "[?powerState=='VM running']"

# To: Get-AzVM -Status | Where-Object {$_.PowerState -eq 'VM running'}

These are massive time savers. AI is incredible at these tasks.

Where AI Hits the Wall (And You Should Stop)

1. Private Endpoint DNS Configuration

The Problem:

Storage account with private endpoint can't be accessed from on-premises

Error: "Unable to resolve mystorageaccount.blob.core.windows.net"

What AI Says:

"Configure private DNS zones and link them to your VNet. Make sure you have the right DNS forwarder setup."

What Actually Happens:

Microsoft docs say one thing. Your hybrid environment with ExpressRoute, custom DNS, and split-brain configuration requires Microsoft support to sort out the conditional forwarding rules and zone configuration.

This is the boundary. AI knows the theory. It doesn't know your specific environment's DNS topology, firewall rules, and route tables all interacting together.

2. Cross-Subscription Networking Issues

The Problem:

VNet peering between subscriptions works, but private endpoints

in subscription A can't reach resources in subscription B

What AI Says:

"Check your NSG rules, verify the peering is in connected state, ensure service endpoints are configured."

What You Need:

Microsoft engineer with access to backend telemetry to see where packets are actually being dropped in the Azure control plane.

3. Azure Portal Says It Should Work, But Doesn't

The Problem:

You've configured everything exactly as the documentation says. The portal shows green checkmarks. Nothing works.

What AI Says:

"Try redeploying the resource. Check if there are any service health issues. Verify your configuration matches the documentation."

What You Need:

Microsoft support to look at backend logs and discover there's a known issue with that specific region or a service-side configuration that's broken.

4. Undocumented Product Limitations

The Example:

"Why can't I have more than 500 private endpoints in a VNet when the documentation doesn't mention this limit?"

What AI Knows:

What's in the public documentation (which doesn't mention this limit).

What Microsoft Support Knows:

"Yeah, that's a soft limit we don't advertise. We can increase it for your subscription."

The Financial Argument

Let's talk money.

Azure Support Costs:

- Developer: $29/month (or free with many EA agreements)

- Standard: $100/month

- Professional Direct: $1,000/month

Your Hourly Cost:

Let's say you make $100,000/year. That's roughly $50/hour.

The Math:

- Spend 3 hours debugging = $150 of your time

- Open a ticket after 30 minutes = $25 of your time + using support you already pay for

You're literally paying Microsoft for support and then not using it while you waste expensive engineering hours on Google searches.

The Real World Example

Last month I hit an issue with Azure Update Manager. VMs were showing as "unsupported" even though they were running supported Windows Server 2022 builds.

What I Did:

1. Asked Claude: "Why would Update Manager show VMs as unsupported?"

2. Claude gave me the standard checklist: agent version, OS version, connectivity

3. Verified everything - all green

4. Asked Claude again with more details

5. Claude said: "This might be a service-side issue or a known bug"

That was my signal. 30 minutes in, AI couldn't help.

Opened a ticket. Microsoft came back in 2 hours: "Known issue, engineering is working on it, here's the workaround."

Total time invested: 30 minutes of debugging + 5 minutes to open ticket + 10 minutes to implement workaround.

If I'd kept debugging? Could have been days before I stumbled on the answer (or more likely, never found it).

When AI Says "I Don't Know"

Here are the AI responses that should trigger an immediate support ticket:

Red Flag Phrases:

- "This might require Microsoft support to investigate"

- "I'd need to see backend logs to determine the cause"

- "This could be a service-side issue"

- "There may be an undocumented limitation"

- "I don't have enough information about your specific environment"

- "This might be a known issue Microsoft is tracking"

When AI starts hedging or asking for information it can't access, you're done. Open the ticket.

How to Use AI Effectively for Azure Debugging

Good Use of AI:

Problem: Need to write a policy that prevents public IP creation

AI Request: "Write an Azure Policy that denies creation of public IPs"

Result: Working policy definition in 30 seconds

Bad Use of AI:

Problem: Private endpoint works from some VMs but not others

AI Request: "Why doesn't my private endpoint work from all VMs?"

Result: 45 minutes of back-and-forth with generic troubleshooting

The second scenario is a complexity problem. Too many variables, environmental-specific, requires telemetry AI doesn't have access to.

The Improved Workflow

Here's what debugging looks like now:

For Simple Problems (90% of cases):

1. Paste error/question into Claude/ChatGPT

2. Get answer

3. Implement solution

4. Done in 5-10 minutes

For Complex Problems (10% of cases):

1. Paste error/question into Claude/ChatGPT

2. Try suggested solutions for 20-30 minutes

3. If not resolved, open Microsoft support ticket with:

- Original problem

- AI's suggestions

- What you've already tried

4. Get actual resolution from Microsoft

Key Point: You're not wasting Microsoft's time. You're using the support you pay for when you hit the limits of publicly available knowledge.

What to Include in Your Support Ticket

Since you've already worked with AI, your ticket should be gold:

Subject: Private endpoint DNS resolution failing from on-premises

Environment:

- Azure subscription: xxxx

- Region: East US

- ExpressRoute circuit: xxxx

Problem:

Cannot resolve privatelink.blob.core.windows.net from on-premises

network. Resolution works from Azure VMs.

Already Verified:

- Private DNS zone correctly configured

- VNet link in place

- DNS forwarder rules configured (per AI assistance)

- No NSG blocks on port 53

- ExpressRoute peering is connected

AI Diagnostic:

Consulted with Claude - suggested standard DNS configuration which

is already in place. AI indicated this may require backend telemetry

to diagnose.

Request:

Need Microsoft engineer to review DNS query path and identify where

resolution is failing in the hybrid environment.

See what you did there? You've already done the Level 1 troubleshooting. You're handing Microsoft a well-documented problem that's ready for their Level 2/3 engineers.

The Exceptions

When You Should Keep Debugging:

- You're learning Azure (educational value in the struggle)

- It's 2 AM and you need a fix NOW (while waiting for ticket response)

- You have a hunch based on past experience

- The problem is clearly your mistake (wrong config, typo, etc.)

When You Should Immediately Ticket:

- Production is down

- Security incident

- Data loss scenario

- Anything where time = money at scale

The Culture Shift

Some Azure admins feel like opening a ticket is "giving up" or admitting defeat.

That's old thinking.

In 2025, with AI available, the calculus has changed:

Old Mindset: "I should be able to figure this out"

New Mindset: "I've exhausted public knowledge in 30 minutes with AI. Time to access Microsoft's internal knowledge."

You're not giving up. You're being efficient.

What About Learning?

"But won't I learn more by debugging longer?"

Yes and no.

You'll learn more about what DOESN'T work. You'll build frustration tolerance. But you won't necessarily learn the RIGHT answer if it's not publicly documented.

Better approach:

1. Use AI to solve it quickly OR

2. Open ticket and get the right answer

3. THEN spend time understanding the solution

4. Document it for your team/blog

You learn more from studying the correct solution than from hours of incorrect debugging.

The Bottom Line

AI hasn't made Microsoft support obsolete. It's made it more valuable.

AI filters out the 90% of problems that ARE documented somewhere. When AI says "I don't know," you've found the 10% that requires Microsoft's internal expertise.

The new debugging rule is simple:

If Claude can't solve your Azure problem after 30 minutes, open a support ticket.

You're not wasting anyone's time. You're using the tools available to you efficiently - both AI and Microsoft support - to solve problems faster.

Stop debugging. Start shipping.

What's your debugging workflow look like in 2025? Hit me up - I'm genuinely curious how other Azure admins are using AI in their daily work.

Azure Admin Starter Kit (Free Download)

Get my KQL cheat sheet, 50 Windows + 50 Linux commands, and an Azure RACI template in one free bundle.

Get the Starter Kit →📊 Stop Rewriting the Same KQL Queries

Get 45+ production-ready KQL queries for Azure Resource Graph, Log Analytics, and Activity Logs. Copy-paste ready with comments and business context.

Download Complete Query LibraryPDF format • No email required • Instant download