The Three AI Operations Roles That Don't Exist Yet (But Will in 12 Months)

2025-11-17 · ~24 min read

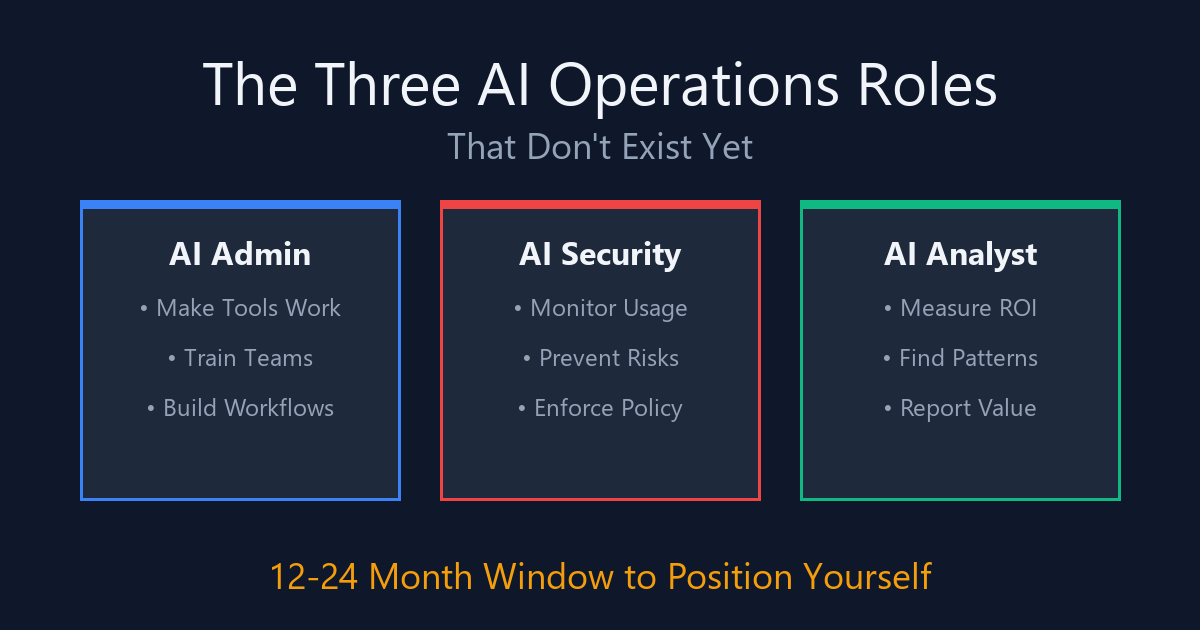

Search for 'AI operations governance jobs' and find nothing. Meanwhile someone has to monitor AI usage, prevent security disasters, and measure ROI. These three roles don't exist officially. They will soon.


This guide is part of our AI-Assisted Azure Operations hub exploring how AI tools transform cloud administration and productivity workflows.

I searched for "AI operations governance jobs" on LinkedIn.

Found nothing.

Searched for "AI security engineer" focused on AI tool governance.

Found generic cybersecurity roles. Nothing about monitoring ChatGPT usage.

Searched for "AI business analyst" for operations intelligence.

Found data science roles. Nothing about measuring AI efficiency in Azure operations.

Meanwhile, at my company - a regulated enterprise - we're adopting AI tools for Azure operations with zero formal governance structure.

Someone needs to monitor what people are asking AI.

Someone needs to prevent credentials from being pasted into ChatGPT.

Someone needs to measure if AI tools are actually worth the investment.

Those three "someones" aren't official roles yet.

But they will be.

And if you position yourself now, you'll define what those roles become.

The Problem Nobody's Formally Solving

Here's what's happening right now at most enterprises:

Engineers are using AI tools:

- ChatGPT for PowerShell generation

- Claude for KQL query writing

- GitHub Copilot for infrastructure code

- AI assistants for troubleshooting

Security teams are worried:

- "What if someone pastes credentials?"

- "How do we audit this?"

- "What data is leaving our environment?"

- Quietly freaking out, no formal response

Leadership wants metrics:

- "What's the ROI?"

- "Are we getting efficiency gains?"

- "Should we invest more?"

- Nobody can answer with data

Compliance needs documentation:

- "Show the examiner our AI governance"

- "Prove we have controls in place"

- "Document the audit trail"

- Scrambling when asked

So who's responsible for all of this?

IT Operations? They enable tools, don't govern usage.

Security? They block threats, don't optimize efficiency.

Business Intelligence? They measure outcomes, don't understand AI.

Answer: Nobody.

The work is falling between organizational cracks.

Test This Yourself (Don't Take My Word)

Experiment 1: Search for these job titles

Go to LinkedIn right now. Search for:

- "AI Operations Governance"

- "AI Security Engineer" + "ChatGPT monitoring"

- "AI Business Analyst" + "operations intelligence"

What you'll find:

- Generic IT operations roles

- Traditional cybersecurity positions

- Data science analyst jobs

What you WON'T find:

- Specific AI governance positions

- Roles monitoring AI tool usage

- Jobs measuring AI operational efficiency

The roles don't exist officially. The work is happening anyway.

Experiment 2: Ask your own organization

Ask your security team: "Who's monitoring what people ask ChatGPT about our Azure environment?"

Ask your leadership: "What's the ROI of our AI tool investments?"

Ask your IT operations: "Who trains people on using AI tools correctly?"

Answers are probably:

- "Nobody specifically"

- "We don't have metrics on that"

- "People just figure it out"

That's the gap.

Experiment 3: Check Microsoft's direction

Look at what Microsoft is building:

- GitHub Copilot integration in Azure Portal (already live)

- Copilot for Azure (general availability coming)

- AI-generated scripts in Cloud Shell (preview)

- AI assistants everywhere in the platform

They're not building this for fun.

They're building this because enterprises will adopt it at scale.

And when enterprises adopt something at scale, someone has to govern it.

Why One "AI Admin" Role Doesn't Work

Initial assumption: "We need one person who governs AI usage."

That's what I thought too.

Then I mapped out what that person would actually DO every day:

Morning:

- Troubleshoot why Copilot isn't working for three engineers

- Review security alerts about suspicious AI usage

- Build a dashboard showing AI efficiency gains for leadership meeting

Afternoon:

- Train new team on prompt engineering

- Investigate possible credential leakage incident

- Analyze usage patterns to identify knowledge gaps

These are three completely different jobs.

Making AI tools work requires Azure expertise and training skills.

Preventing AI disasters requires security operations and risk assessment.

Measuring AI value requires data analysis and business intelligence.

Different skill sets. Different success metrics. Different reporting structures.

Enterprises don't create jobs that report to three different executives and require three unrelated skill sets.

They create three distinct roles.

Role 1: The AI Admin

What this person actually does every day:

Monday morning:

"Hey, why isn't Copilot working in my Azure Portal?"

→ Troubleshoot authentication issues

→ Fix RBAC permissions

→ Document the solution

Tuesday afternoon:

"I need a PowerShell script that creates VMs with our company's tagging standards. Can you help?"

→ Build prompt template that includes compliance requirements

→ Test that it generates correct output

→ Add to shared prompt library
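What "a prompt template that includes compliance requirements" can look like in practice: a minimal sketch, assuming a hypothetical tagging standard (`CostCenter`, `Environment`, `Owner`) that you'd swap for your own. The point is that engineers fill in the variables and the compliance rules ride along automatically, so nobody has to remember them.

```python
from string import Template

# Hypothetical company tagging standard (assumption); replace with your own policy.
REQUIRED_TAGS = {
    "CostCenter": "finance code, e.g. CC-1234",
    "Environment": "one of prod, test, dev",
    "Owner": "team email alias",
}

# Shared template: engineers supply the variables, the rules are always included.
VM_PROMPT = Template(
    "Write an Azure PowerShell script using the Az module that creates "
    "$count VMs in resource group '$resource_group' in region '$location'.\n"
    "Every resource MUST carry these tags:\n$tag_rules\n"
    "Do not hard-code credentials; read them from a secure source.\n"
    "Return only the script."
)


def build_vm_prompt(count: int, resource_group: str, location: str) -> str:
    """Render the template so every request carries the tagging rules."""
    tag_rules = "\n".join(f"- {name}: {rule}" for name, rule in REQUIRED_TAGS.items())
    return VM_PROMPT.substitute(
        count=count,
        resource_group=resource_group,
        location=location,
        tag_rules=tag_rules,
    )


if __name__ == "__main__":
    print(build_vm_prompt(3, "rg-app-prod", "eastus"))
```

Keeping templates in version control (as plain files or code like this) is what makes the "version control and documentation" part of the prompt library realistic.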

Wednesday:

"We hired three new engineers. They need to learn AI-augmented workflows."

→ Run training session

→ Demonstrate effective prompting

→ Share best practices

Thursday:

"Everyone keeps asking AI the same ExpressRoute question. Can we make this easier?"

→ Create reusable prompt template

→ Document common patterns

→ Add to knowledge base

Friday:

"The AI-generated script broke our naming convention."

→ Fix the prompt template

→ Add validation

→ Update documentation
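"Add validation" can start as a simple check run on every generated script before anyone executes it. A sketch, assuming a hypothetical convention of `vm-<env>-<app>-<two digits>` (for example `vm-prod-web-01`). It only sees literal names passed to `-Name` or `-VMName`, so names built from variables still need a human look.

```python
import re

# Hypothetical naming convention (assumption): vm-<env>-<app>-<nn>, e.g. vm-prod-web-01.
NAME_PATTERN = re.compile(r"^vm-(prod|test|dev)-[a-z0-9]+-\d{2}$")
# Literal values passed to -Name or -VMName in the generated PowerShell.
NAME_ARG = re.compile(r"-(?:VMName|Name)\s+['\"]([^'\"]+)['\"]")


def naming_violations(script: str) -> list[str]:
    """Return every literal VM name in the script that breaks the convention."""
    return [name for name in NAME_ARG.findall(script) if not NAME_PATTERN.match(name)]


if __name__ == "__main__":
    generated = (
        'New-AzVM -ResourceGroupName "rg-app" -Name "vm-prod-web-01"\n'
        'New-AzVM -ResourceGroupName "rg-app" -Name "WebServer2"'
    )
    print(naming_violations(generated))
```

Every time a violation slips through, the fix goes in two places: the template (so the AI stops producing it) and the check (so it gets caught if it happens again).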

This is operations work. Making tools work. Training people. Building reusable patterns.

Technical Responsibilities

Tool deployment and maintenance:

- Deploy Azure OpenAI services

- Configure GitHub Copilot for teams

- Maintain AI tool integrations

- Troubleshoot when things break

- Manage access and permissions

Prompt library development:

- Create templates for common tasks

- Include compliance requirements

- Add security guardrails

- Test for accuracy

- Version control and documentation

Workflow integration:

- Combine AI with existing automation

- Build AI-augmented processes

- Create validation frameworks

- Document standard operating procedures

Training and enablement:

- Teach effective prompt engineering

- Share best practices

- Create documentation

- Answer questions

- Build internal community

Skills Required

Azure operations expertise:

You need to know what good PowerShell looks like to validate AI output.

You need to understand Azure architecture to build correct prompts.

You need operations experience to know what workflows make sense.

Prompt engineering:

Not "ask ChatGPT for help."

"Build production-ready prompts that include our compliance requirements, security standards, naming conventions, and operational constraints."

Training ability:

You're teaching people how to use AI effectively.

Not everyone learns the same way.

You need to explain complex concepts clearly.

Tool administration:

Deploy services. Manage access. Troubleshoot issues.

Standard IT operations work, but for AI tools.

Success Metrics

Adoption rate:

How many people are using AI tools?

Are they using them effectively?

Are they getting value?

Time savings:

Measured reduction in manual work.

Documented efficiency gains.

Real metrics, not vibes.

Output quality:

Is AI-generated code production-ready?

Are people fixing AI output constantly or using it as-is?

A direct indicator of how effective the prompt library is.

Training completion:

Did people complete training?

Are they applying what they learned?

Reduction in basic questions over time?
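Two of these metrics reduce to simple ratios once you log interactions. A sketch, assuming a hypothetical log where each record notes whether the generated output shipped without edits. How you capture that flag (a checkbox in the prompt library, a pull request label) is up to you and is an assumption here.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    user: str
    output_used_as_is: bool  # hypothetical flag: True if generated code shipped unedited


def adoption_rate(active_users: set[str], licensed_users: int) -> float:
    """Share of licensed users who actually used the tool in the period."""
    return len(active_users) / licensed_users if licensed_users else 0.0


def as_is_rate(interactions: list[Interaction]) -> float:
    """Share of AI outputs used without manual fixes (a proxy for template quality)."""
    if not interactions:
        return 0.0
    return sum(i.output_used_as_is for i in interactions) / len(interactions)


if __name__ == "__main__":
    log = [Interaction("ana", True), Interaction("ben", False), Interaction("ana", True)]
    print(adoption_rate({i.user for i in log}, 10), as_is_rate(log))
```

A rising as-is rate after a template change is the kind of evidence that turns "the prompt library helps" into a measured claim.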

What This Role Is Called Right Now

Current placeholder titles:

- "Azure Operations Engineer" (with AI as side project)

- "Cloud Automation Lead" (AI falls to them)

- "Senior Infrastructure Engineer" (whoever seems technical)

What this becomes:

- "AI Operations Administrator"

- "AI-Augmented Infrastructure Lead"

- "Cloud AI Operations Engineer"

Salary prediction:

$90K-$130K depending on enterprise size and location.

Similar to current Azure administrator roles.

Premium for AI specialization.

This role emerges first. It's the most obvious gap.

Role 2: The AI Security Engineer

What this person actually does every day:

Monday morning:

Dashboard alert: "User pasted 40-character string matching credential pattern into ChatGPT"

→ Review the incident

→ Confirm it was an access key

→ Revoke the credential

→ Document for compliance

→ Talk to the user about proper procedures
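The Monday alert implies something is inspecting prompt text before it leaves. A minimal sketch of that check, assuming you have a proxy or gateway where prompts can be scanned (hypothetical here). It combines a few well-known secret formats with an entropy test for long random-looking strings like the 40-character one in the alert. The patterns are illustrative, not a complete DLP ruleset.

```python
import math
import re
from collections import Counter

# Illustrative detectors only (assumption); production DLP should use a maintained ruleset.
PATTERNS = {
    "storage_connection_string": re.compile(r"AccountKey=[A-Za-z0-9+/=]{20,}"),
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "password_assignment": re.compile(r"(?i)\b(?:password|pwd|secret)\s*[=:]\s*\S+"),
}
# Long unbroken runs of key-like characters.
TOKEN = re.compile(r"[A-Za-z0-9+/=_-]{32,}")


def shannon_entropy(s: str) -> float:
    """Bits per character; random keys score high, words and GUIDs score lower."""
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in Counter(s).values())


def find_secrets(prompt: str, entropy_threshold: float = 4.5) -> list[str]:
    """Return the name of every detector that fires on the prompt text."""
    hits = [name for name, rx in PATTERNS.items() if rx.search(prompt)]
    if any(shannon_entropy(t) >= entropy_threshold for t in TOKEN.findall(prompt)):
        hits.append("high_entropy_token")
    return hits


if __name__ == "__main__":
    print(find_secrets("why does my script fail? password = Hunter2!"))
```

The entropy check will produce false positives (long resource IDs, for example), which is why a hit should open an incident for review rather than silently block.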

Tuesday:

Pattern detection: "Contractor account asked 89 questions in one hour"

→ Unusual behavior flag

→ Review question content

→ All questions about privileged access

→ Escalate to security operations

→ Account temporarily restricted
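"89 questions in one hour" is a rate anomaly, and the simplest version of that detection is counting prompts per user per clock hour. A sketch, assuming a hypothetical fixed threshold of 50; a real setup would more likely compare each user against their own baseline.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable


def hourly_counts(events: Iterable[tuple[str, datetime]]) -> Counter:
    """Count prompts per (user, clock hour)."""
    return Counter(
        (user, ts.replace(minute=0, second=0, microsecond=0)) for user, ts in events
    )


def flag_bursts(events: Iterable[tuple[str, datetime]], threshold: int = 50):
    """Return sorted (user, hour, count) tuples for user-hours above the threshold."""
    return sorted(
        (user, hour, n)
        for (user, hour), n in hourly_counts(events).items()
        if n > threshold
    )


if __name__ == "__main__":
    sample = [("contractor1", datetime(2025, 11, 17, 9, i // 2)) for i in range(89)]
    print(flag_bursts(sample))
```

Volume alone only raises the flag. The step that matters is the next one in the scenario: reviewing what the questions were about.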

Wednesday:

Policy violation: "User asked AI how to bypass NSG rules"

→ Automatic flag

→ Review context (legitimate troubleshooting or actual attempt?)

→ Log the incident

→ Follow up with user

→ Update training materials
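The key detail in Wednesday's workflow is that the flag routes to a human instead of blocking, because "how do I bypass NSG rules" is often legitimate troubleshooting. A sketch with hypothetical rules and a hypothetical review-queue record:

```python
import re
from dataclasses import dataclass

# Hypothetical review rules (assumption); wording and coverage are placeholders.
REVIEW_RULES = [
    (re.compile(r"\b(bypass|disable|circumvent)\b.*\b(nsg|firewall|network security group)\b", re.I),
     "network_control_bypass"),
    (re.compile(r"\b(elevate|escalate)\b.*\b(privilege|owner|global admin)\b", re.I),
     "privilege_escalation"),
]


@dataclass
class ReviewItem:
    user: str
    rule: str
    prompt: str
    status: str = "pending_review"  # a person decides: troubleshooting or a real attempt


def triage(user: str, prompt: str) -> list[ReviewItem]:
    """Queue one review item per matching rule; nothing is blocked automatically."""
    return [ReviewItem(user, rule, prompt) for rx, rule in REVIEW_RULES if rx.search(prompt)]


if __name__ == "__main__":
    for item in triage("u1", "How do I bypass NSG rules to reach the VM?"):
        print(item)
```

Keyword rules like these are crude, which is exactly why they feed a review queue and a training update rather than an automatic penalty.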

Thursday:

Validation request: "AI generated this PowerShell to modify RBAC. Approve for production?"

→ Review against security policies

→ Check for privilege escalation

→ Verify compliance with access control standards

→ Approve with documentation
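Part of Thursday's review can be a first-pass scan before the human read. A sketch, assuming a hypothetical checklist that treats `Owner` and `User Access Administrator` assignments, or any assignment scoped to an entire subscription, as high risk. It only inspects literal arguments to `New-AzRoleAssignment`, so it narrows the review rather than replacing it.

```python
import re

# Hypothetical high-risk checklist (assumption); align with your access-control standard.
HIGH_RISK_ROLES = {"owner", "user access administrator"}
ROLE_ARG = re.compile(
    r"New-AzRoleAssignment\b[^\n]*-RoleDefinitionName\s+['\"]([^'\"]+)['\"]", re.I
)
# A scope that stops at the subscription, with no resource group or resource below it.
SUBSCRIPTION_SCOPE = re.compile(r"-Scope\s+['\"]/subscriptions/[^/'\"]+['\"]", re.I)


def rbac_findings(script: str) -> list[str]:
    """List risky patterns found in an AI-generated RBAC script."""
    findings = []
    for role in ROLE_ARG.findall(script):
        if role.lower() in HIGH_RISK_ROLES:
            findings.append(f"assigns high-privilege role: {role}")
    if SUBSCRIPTION_SCOPE.search(script):
        findings.append("assignment scoped to an entire subscription")
    return findings


if __name__ == "__main__":
    print(rbac_findings(
        "New-AzRoleAssignment -ObjectId $id -RoleDefinitionName 'Owner' "
        "-Scope '/subscriptions/0000'"
    ))
```

An empty result means "nothing obvious", not "approved". Roles and scopes passed through variables still need the reviewer's eyes.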

Friday:

Quarterly compliance report: "Show examiner our AI governance controls"

→ Generate audit trail

→ Demonstrate policy enforcement

→ Show incidents detected and resolved

→ Prove controls are working

This is security work. Monitoring. Risk detection. Policy enforcement. Incident response.

Technical Responsibilities

Usage monitoring:

- Real-time tracking of AI interactions

- Pattern detection for suspicious behavior

- Alerting on policy violations

- Continuous compliance monitoring

Data protection:

- Prevent credential leakage to AI systems

- Block PII from being sent to external APIs

- Enforce data classification policies

- Monitor for sensitive information exposure

Access control:

- RBAC for AI tool access

- User authentication and authorization

- Privileged access monitoring

- Contractor and vendor oversight

Incident response:

- Investigate security alerts

- Respond to policy violations

- Coordinate with security operations

- Document and report incidents

Validation frameworks:

- Review AI-generated code before production

- Check for security vulnerabilities

- Enforce compliance requirements

- Approve or reject based on risk

Skills Required

Security operations background:

This isn't "I read about security."

This is "I've responded to incidents, understand threats, know how attackers think."

Threat detection:

Pattern recognition in usage data.

Understanding what normal looks like.

Identifying anomalies quickly.

Policy enforcement:

Creating rules that are effective but not annoying.

Balancing security with productivity.

Communicating why policies exist.

Risk assessment:

"Is this usage pattern risky or just unusual?"

"Is this violation intentional or accidental?"

Context matters. Judgment required.

Azure security expertise:

RBAC, Defender, Sentinel, access controls.

You need to understand the environment you're protecting.

AI capabilities and risks:

Understanding what AI can and can't do.

Knowing common failure modes.

Recognizing when AI suggestions are dangerous.

Success Metrics

Incidents prevented:

Caught credential exposure before it reached external systems.

Blocked policy violations before they happened.

Detected suspicious patterns before damage occurred.

Policy compliance rate:

Percentage of AI usage following approved patterns.

Reduction in violations over time.

Demonstrated control effectiveness.

Mean time to detect:

How quickly security issues are identified.

How fast incidents are responded to.

Efficiency of monitoring systems.
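Mean time to detect and mean time to respond are just averages over incident timestamps. A sketch, assuming a hypothetical incident record with `occurred` (when the risky prompt was sent), `detected`, and `resolved` times:

```python
from datetime import datetime
from statistics import mean


def mean_minutes(pairs) -> float:
    """Average gap in minutes between (start, end) datetime pairs."""
    return mean((end - start).total_seconds() / 60 for start, end in pairs)


def mttd_mttr(incidents: list[dict]) -> tuple[float, float]:
    """Return (mean time to detect, mean time to resolve) in minutes.

    Each incident is a dict with 'occurred', 'detected', and 'resolved'
    datetimes (hypothetical schema).
    """
    mttd = mean_minutes((i["occurred"], i["detected"]) for i in incidents)
    mttr = mean_minutes((i["detected"], i["resolved"]) for i in incidents)
    return mttd, mttr


if __name__ == "__main__":
    sample = [{
        "occurred": datetime(2025, 11, 17, 9, 0),
        "detected": datetime(2025, 11, 17, 9, 10),
        "resolved": datetime(2025, 11, 17, 10, 10),
    }]
    print(mttd_mttr(sample))
```

Tracking both numbers separately matters: fast detection with slow resolution points at a process problem, not a monitoring one.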

Risk reduction:

Measurable decrease in security exposure.

Demonstrated value to leadership.

Compliance requirements met.

What This Role Is Called Right Now

Current placeholder titles:

- "Security Analyst" (AI monitoring added to duties)

- "Cloud Security Engineer" (governance tacked on)

- "Compliance Specialist" (monitoring AI usage)

What this becomes:

- "AI Security Operations Engineer"

- "AI Governance and Risk Manager"

- "AI Security Monitoring Specialist"

Salary prediction:

$110K-$160K with security premium.

Higher than general security roles.

Reflects specialized knowledge of AI risks.

This role emerges second. Security can't ignore AI adoption forever.

Role 3: The AI Analyst

What this person actually does every day:

Monday morning:

Leadership meeting prep: "Show me AI ROI for Q4"

→ Pull usage data

→ Calculate time savings

→ Convert to dollar value

→ Build executive dashboard

→ "AI tools saved 147 hours this month = $22K in labor costs"
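The conversion behind that headline number is hours saved times a loaded hourly rate. The example works out to roughly $150/hour (147 × $150 = $22,050); that rate is an assumption inferred from the figures, not stated in them. A sketch that computes savings per task against a manual baseline:

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    task: str
    baseline_minutes: float  # estimated manual effort for this task
    actual_minutes: float    # measured effort with AI, including review time


def hours_saved(records: list[TaskRecord]) -> float:
    """Total hours saved; tasks where AI was slower count against the total."""
    return sum(r.baseline_minutes - r.actual_minutes for r in records) / 60


def labor_value(records: list[TaskRecord], loaded_hourly_rate: float = 150.0) -> float:
    """Dollar value of time saved at an assumed fully loaded hourly rate."""
    return hours_saved(records) * loaded_hourly_rate


if __name__ == "__main__":
    month = [TaskRecord("VM deployment script", 120, 20), TaskRecord("KQL query", 45, 15)]
    print(hours_saved(month), labor_value(month))
```

Keeping the slower-with-AI tasks in the total is a deliberate choice: leadership trusts the number more when it isn't cherry-picked.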

Tuesday:

Pattern analysis: "Why are so many people asking about Azure tagging?"

→ 45% of questions involve tagging

→ This indicates documentation or training gap

→ Recommendation: Create tagging guide or automate tagging

→ Potential savings: 67 hours/month if addressed
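Getting to "45% of questions involve tagging" means classifying questions by topic and computing shares. A sketch, assuming a hypothetical keyword map built by hand; it matches whole words so that "staging" doesn't count as "tag", and the first matching topic wins.

```python
import re
from collections import Counter

# Hypothetical keyword map (assumption); build yours from real question logs.
TOPICS = {
    "tagging": {"tag", "tags", "tagging"},
    "expressroute": {"expressroute"},
    "rbac": {"rbac", "role", "roles"},
}


def classify(question: str) -> str:
    """Assign the first topic whose keywords appear as whole words."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    for topic, keywords in TOPICS.items():
        if words & keywords:
            return topic
    return "other"


def topic_shares(questions: list[str]) -> dict[str, float]:
    """Fraction of questions falling into each topic."""
    counts = Counter(classify(q) for q in questions)
    total = sum(counts.values())
    return {topic: n / total for topic, n in counts.items()}


if __name__ == "__main__":
    print(topic_shares(["How do I enforce tags?", "ExpressRoute circuit down"]))
```

Keyword matching is the crude first version; it's usually enough to spot a 45% cluster, and you can refine the categories once you know where to look.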

Wednesday:

Cost analysis: "Are AI tools worth what we're paying?"

→ Azure OpenAI costs: $840/month

→ GitHub Copilot: $390/month

→ Time saved: 147 hours = $22K value

→ Value-to-cost ratio: ~18x ($22K ÷ $1,230 in monthly tool costs)

→ Recommendation: Expand usage
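The arithmetic behind that ratio, using the figures above: $840 + $390 = $1,230/month in tools against $22K of value. A short sketch that also shows the stricter ROI definition, (value − cost) / cost, since finance teams sometimes expect that one and it comes out lower:

```python
def value_to_cost(monthly_value: float, monthly_costs: dict[str, float]) -> float:
    """Value delivered per dollar of tool spend."""
    return monthly_value / sum(monthly_costs.values())


def net_roi(monthly_value: float, monthly_costs: dict[str, float]) -> float:
    """Classic ROI: net gain divided by cost."""
    cost = sum(monthly_costs.values())
    return (monthly_value - cost) / cost


if __name__ == "__main__":
    costs = {"azure_openai": 840, "github_copilot": 390}
    print(round(value_to_cost(22_000, costs), 1), round(net_roi(22_000, costs), 1))
```

Roughly 17.9x value-to-cost versus 16.9x net ROI. Either is fine on a dashboard; just label which one you're showing.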

Thursday:

Knowledge gap identification: "ExpressRoute questions spiked last week"

→ 23 people asked similar questions

→ This shouldn't be that common

→ Indicates training need or documentation gap

→ Recommendation: Targeted training session

→ Potential time savings: 46 hours/month
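"ExpressRoute questions spiked" needs a definition of spike. A sketch, assuming a hypothetical rule: flag a topic when last week's count of distinct askers is at least 3x its average over prior weeks and at least 10 in absolute terms.

```python
def spiking_topics(last_week: dict[str, int], baseline_weeks: list[dict[str, int]],
                   factor: float = 3.0, min_count: int = 10) -> dict[str, tuple[int, float]]:
    """Flag topics whose weekly count jumped well above their recent average.

    Counts are distinct people asking about a topic (hypothetical schema).
    Returns {topic: (last_week_count, baseline_average)}.
    """
    flagged = {}
    for topic, count in last_week.items():
        history = [week.get(topic, 0) for week in baseline_weeks]
        avg = sum(history) / len(history) if history else 0.0
        # max(avg, 1.0) keeps brand-new topics from tripping on a zero baseline
        if count >= min_count and count >= factor * max(avg, 1.0):
            flagged[topic] = (count, avg)
    return flagged


if __name__ == "__main__":
    print(spiking_topics({"expressroute": 23}, [{"expressroute": 3}, {"expressroute": 5}]))
```

The minimum count stops a jump from one asker to three from looking like an emergency.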

Friday:

Executive reporting: "AI Adoption Dashboard for leadership"

→ Usage by team

→ Efficiency gains

→ Cost vs. value

→ Recommendations for expansion

→ Risks and concerns

This is business intelligence work. Analysis. Insights. Executive communication.

Technical Responsibilities

Usage analytics:

- Track who's using AI tools

- What they're asking

- How often

- What value they're getting

ROI measurement:

- Calculate time savings

- Convert to dollar value

- Compare to tool costs

- Demonstrate business value

Pattern identification:

- What questions are most common?

- Where are knowledge gaps?

- What workflows are being automated?

- What's working and what isn't?

Executive reporting:

- Dashboard development

- Business intelligence visualization

- Leadership presentations

- Recommendations for optimization

Cost optimization:

- Tool spend analysis

- Value vs. cost comparison

- Identify waste or inefficiency

- Recommend budget allocation

Skills Required

Data analysis:

Working with usage logs and metrics.

Finding patterns in the data.

Statistical analysis when needed.

Business intelligence:

Dashboard development (Power BI, Tableau, whatever).

Visualization best practices.

Making data tell a story.

Azure operations understanding:

You need context to analyze usage correctly.

"Is this a lot of questions or normal?"

"Is this time savings significant or marginal?"

Executive communication:

Translating technical metrics into business value.

Speaking leadership's language.

Making recommendations they can act on.

Cost and value analysis:

Calculating ROI accurately.

Understanding total cost of ownership.

Comparing alternatives fairly.

Success Metrics

ROI quantified:

Clear demonstration of value.

Leadership can make informed decisions.

Budget justified or expanded.

Insights delivered:

Actionable recommendations provided.

Knowledge gaps identified.

Training needs documented.

Accuracy of recommendations:

Did your suggestions actually work?

Were training needs real?

Was tool expansion justified?

Executive satisfaction:

Are leaders getting the information they need?

Can they make decisions based on your reports?

Are you invited to strategic discussions?

What This Role Is Called Right Now

Current placeholder titles:

- "Business Analyst" (tracking AI usage as side project)

- "Operations Intelligence Analyst" (AI metrics added)

- "BI Developer" (dashboards include AI now)

What this becomes:

- "AI Operations Intelligence Analyst"

- "AI Business Intelligence Lead"

- "AI Value Measurement Specialist"

Salary prediction:

$95K-$140K in analytics range.

Similar to business intelligence roles.

Premium for AI specialization.

This role emerges third. After companies adopt AI and security is handled, they want to optimize.

How Companies Will Actually Staff This

Reality varies by company size:

Small companies (<500 people):

One person wears all three hats.

Usually falls to IT operations lead.

"You're technical, you figure out this AI stuff."

Works until AI usage scales beyond one person.

Mid-size companies (500-2000):

AI Admin hired first (operations need).

Security engineer adds AI monitoring (risk requirement).

Analyst function shared with existing BI team (metrics request).

Formal coordination meetings start.

Roles still not completely distinct.

Large enterprises (2000+):

All three as distinct positions.

Each with dedicated budget.

Clear reporting structures.

Formal collaboration processes.

AI Admin reports to Infrastructure/Operations.

AI Security Engineer reports to InfoSec.

AI Analyst reports to Business Intelligence or Operations Analytics.

Different organizations. Different metrics. Different priorities.

This is how enterprises work.

Why These Roles Will Definitely Exist

Pattern recognition from previous technology shifts:

VMware Administration (2000-2010)

Year 2000:

"Physical servers are how real infrastructure works."

"Virtualization is just for dev/test environments."

"We don't need dedicated VMware admins."

Year 2005:

Some companies hiring "virtualization specialists."

Most companies: "The server admin can handle it."

Year 2010:

Every enterprise has dedicated VMware administrators.

Distinct career path.

Formal training and certifications.

Market-rate salaries established.

Result: Physical server admin roles mostly eliminated.

Cloud Architecture (2010-2020)

Year 2010:

"Cloud is not for production workloads."

"We need on-premises control."

"We don't need cloud architects."

Year 2015:

Some companies hiring "cloud engineers."

Most companies: "The infrastructure team can handle it."

Year 2020:

Every enterprise has cloud architects.

Distinct specialization.

High demand, established salaries.

Multiple certification paths.

Result: Datacenter operations roles largely eliminated.

DevOps Engineering (2010-2020)

Year 2010:

"Developers develop, operations deploys."

"We don't need people who do both."

"DevOps is a philosophy, not a job."

Year 2015:

Some companies hiring "DevOps engineers."

Most companies: "We already have dev and ops teams."

Year 2020:

DevOps engineer is standard role.

Clear responsibilities.

Defined skill sets.

Competitive salaries.

Result: Traditional sysadmin roles transformed.

AI Operations Governance (2025-2027)

Year 2025 (now):

"AI is just a tool people use."

"We don't need dedicated AI governance."

"Someone can monitor it as a side project."

Year 2026 (prediction):

Some companies hiring "AI operations" roles.

Most companies: "The ops/security/BI team can handle it."

Year 2027 (prediction):

Every enterprise has AI operations roles.

Three distinct functions.

Formal job descriptions.

Market-rate salaries.

Result: Manual operations roles transform or disappear.

The pattern always plays out the same way:

- New technology emerges

- "This won't require new roles"

- Early adopters prove otherwise

- Mainstream slowly accepts reality

- Role formalizes

- People who positioned early win

We're at step 2 right now.

What These Roles Actually Look Like Working Together

Real scenario: AI-generated PowerShell for production deployment

The Workflow

Engineer submits request:

"I need PowerShell to deploy 50 VMs across three subscriptions with specific tagging and security requirements."

AI Admin involvement:

→ Uses validated prompt template from library

→ Template includes compliance requirements automatically

→ Generates PowerShell script

→ Initial validation (syntax, logic, standards)

→ Forwards to security review

AI Security Engineer involvement:

→ Reviews AI-generated script

→ Validates against security policies

→ Checks for privilege escalation risks

→ Confirms compliance requirements met

→ Approves with documentation

→ Logs approval in audit trail
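For the audit trail to mean anything to an examiner, the approval has to be tied to the exact script that was reviewed. A sketch, assuming a hypothetical JSON-lines log file: each entry stores a SHA-256 hash of the script, so if someone edits the script after approval, the hash no longer matches.

```python
import hashlib
import json
from datetime import datetime, timezone


def approval_record(script: str, requester: str, reviewer: str,
                    decision: str, notes: str = "") -> dict:
    """Build one audit entry tying a decision to the exact script text reviewed."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "script_sha256": hashlib.sha256(script.encode("utf-8")).hexdigest(),
        "requester": requester,
        "reviewer": reviewer,
        "decision": decision,  # e.g. "approved" or "rejected"
        "notes": notes,
    }


def append_record(path: str, record: dict) -> None:
    """Append the entry as one JSON line (store it somewhere append-only in practice)."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    print(approval_record("New-AzVM ...", "eng1", "sec1", "approved"))
```

A local file is only for illustration; for compliance you'd want the log in storage that users can't rewrite.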

AI Analyst involvement:

→ Records time saved (15 minutes vs. 2 hours manual)

→ Tracks this as common pattern

→ Notes prompt template effectiveness

→ Adds to ROI calculation

→ Identifies this as high-value use case

Result:

- Script deployed in 20 minutes (vs. 2+ hours manual)

- Security validated and documented

- Compliance requirements met

- Time savings measured

- Best practice captured for future use

Three roles. One workflow. All necessary.

Without AI Admin: No validated prompt template, engineer struggles.

Without AI Security Engineer: Script might violate policies, no audit trail.

Without AI Analyst: No measurement of value, can't justify continued investment.

Each role essential to the process.

What This Means If You Position Yourself Now

Current state (November 2025):

Roles don't exist officially.

No certifications or formalized training.

No established salary bands.

No standard job descriptions.

Work is happening anyway.

Falling to whoever seems capable.

Ad-hoc assignments.

No career path.

12-24 months from now (2026-2027):

Roles get formalized.

Job descriptions written.

Salary bands established.

Training and certification emerge.

Career paths defined.

The opportunity:

People who position NOW will:

- Define what the roles become

- Set salary expectations (first hires establish market rate)

- Become the "experts" everyone else learns from

- Get hired before public job postings

- Skip the "entry-level" phase

Historical example: Early Cloud Architects

2012-2014:

People doing cloud architecture work without title.

No formal training programs.

No established career path.

Those people:

- Defined what "cloud architect" meant

- Established market rates ($120K-$180K)

- Became the experts everyone else learned from

- Published books, created courses

- Led practices at consulting firms

2015-2020:

Role formalized.

Training programs emerged.

Certifications created.

Market flooded with candidates.

Early positioners became VPs and Principal Architects.

Later entrants fought for associate positions.

Same pattern will play out with AI operations roles.

First movers set the standard. Latecomers follow it.

Where to Actually Start

Step 1: Pick which role fits your current skills

If you're operations-focused:

You probably already troubleshoot tools and train people.

AI Admin is the natural path.

Start:

- Use AI tools for your daily work

- Build prompt templates as you go

- Document what works

- Share with your team (informally)

If you're security-focused:

You already monitor threats and enforce policies.

AI Security Engineer is the natural path.

Start:

- Monitor what your team asks AI

- Flag potential issues you notice

- Build simple validation checklists

- Document policy violations

If you're analytics-focused:

You already work with data and build reports.

AI Analyst is the natural path.

Start:

- Track your own AI usage and time savings

- Build simple dashboards

- Calculate ROI for your team

- Present findings to leadership

Pick the one that fits your current role. Don't try to do all three.

Step 2: Do the work before the title exists

For AI Admin path:

Week 1-4: Use AI daily

- ChatGPT, Claude, Copilot - whatever works

- Real tasks, not toy examples

- Document what you learn

Week 5-8: Build templates

- Create reusable prompts

- Include your compliance requirements

- Test for accuracy

- Start a shared library

Week 9-12: Train others

- Show teammates how you're using AI

- Share your prompt templates

- Answer questions

- Build internal expertise

For AI Security Engineer path:

Week 1-4: Observe and document

- What are people asking AI?

- Any security concerns?

- Where are the risks?

- Document patterns

Week 5-8: Build checklists

- What should be validated?

- What's high risk vs. low risk?

- Create simple frameworks

- Test with real examples

Week 9-12: Implement monitoring

- Even informal tracking helps

- Log concerning patterns

- Report to security team

- Demonstrate the need

For AI Analyst path:

Week 1-4: Track your usage

- Time saved on tasks

- What AI helps with most

- Where it doesn't help

- Calculate your personal ROI

Week 5-8: Expand to team

- Ask teammates about their usage

- Aggregate the data

- Find patterns

- Calculate team ROI

Week 9-12: Build dashboard

- Visualize the findings

- Show leadership

- Make recommendations

- Demonstrate value

You're not waiting for permission. You're doing the work and documenting results.

Step 3: Document everything publicly

Blog posts about what you're learning.

GitHub repos with tools you're building.

Conference talks or local meetups.

When the role gets formalized, you're the expert who documented the emergence.

What to document:

For AI Admin:

- Prompt templates that work

- Training materials you created

- Common mistakes and solutions

- Best practices you discovered

For AI Security Engineer:

- Security risks you identified

- Validation frameworks you built

- Policy violations you caught

- Monitoring approaches that work

For AI Analyst:

- ROI calculations and methods

- Usage patterns you found

- Insights that drove decisions

- Dashboard designs that work

You're creating the training materials that will be used when these roles formalize.

Step 4: Position for the formal role

When your company creates the position:

- You've been doing the work for 12+ months

- You have documented results to show

- You have established expertise

- You're the obvious choice

When OTHER companies create the position:

- You have a portfolio of work

- You have a blog and GitHub presence

- You have demonstrable expertise

- You're the external hire they want

This is your 12-24 month window.

What I'm Actually Doing About This

Not making predictions. Running experiments.

This month:

- Using AI for 50+ Azure operations tasks

- Tracking success rate and time savings

- Building validation frameworks

- Documenting what works and what doesn't

Next 3 months:

- Define all three roles in detail (three separate posts coming)

- Build platform that enables these roles

- Test in production environment

- Document results publicly

Next 6-12 months:

- Establish expertise in AI operations governance

- Build tools that others can use

- Create training materials

- Position for formal role emergence

Goal:

When these roles get formalized, I want to be the person who defined them.

Not because I have it all figured out.

Because I'm documenting the transition in real-time.

Learning publicly. Building tools. Sharing results.

The Three Follow-Up Posts

Coming in the next few weeks:

Post 1: "The AI Admin: Making AI Work in Enterprise Operations"

Deep dive on this specific role:

- Detailed day-to-day responsibilities

- Required technical skills

- How to build prompt libraries

- Training approaches that work

- Career path and positioning

Post 2: "The AI Security Engineer: Governing AI in Regulated Environments"

Security-specific focus:

- Risk monitoring frameworks

- Policy enforcement approaches

- Compliance requirements

- Incident response procedures

- Validation methodologies

Post 3: "The AI Analyst: Measuring AI's Business Impact"

Analytics and BI focus:

- ROI calculation methods

- Usage pattern analysis

- Executive reporting approaches

- Dashboard design

- Business intelligence techniques

Each post goes deep on one role.

This post establishes the category.

The Bottom Line

These aren't guesses. They rest on four observable patterns:

AI tools ARE being adopted for operations (verifiable, happening now).

Someone HAS to govern this (work exists, undeniable).

Current roles DON'T fully cover it (organizational gap, clear).

Enterprises WILL formalize these functions (historical pattern, established).

The timeline:

Now: Work falls between organizational cracks.

6 months: Ad-hoc assignments to whoever seems capable.

12 months: Some companies create formal roles.

24 months: Standard job titles across industry.

The opportunity:

Position yourself BEFORE roles are formalized.

Define what the roles become.

Be the expert when companies start hiring.

Skip the "entry-level" phase entirely.

The choice:

Wait until roles are official → compete with everyone else.

Position now → define the standard others follow.

First movers set the market. Latecomers follow.

Already doing AI operations work without a formal title? Tell me which of these three roles matches what you're actually doing.

Think this is overblown? Search for these job titles yourself. Then check back in 12 months and see if I was right.

Questions about positioning for these roles? Drop them below. We're all documenting this transition together.

Azure Admin Starter Kit (Free Download)

Get my KQL cheat sheet, 50 Windows + 50 Linux commands, and an Azure RACI template in one free bundle.

Built from managing 44 subscriptions and 31,000+ resources. Free download, no email required, instant access.

Get the Starter Kit →